MemGPT allows developers to create permanent chatbots with self-editing memories. It intelligently manages different memory layers in LLMs to provide extended context efficiently.

One of the main challenges in artificial intelligence is memory limitations. Once trained, an AI model operates based on the data it was given and the insights it derived from that dataset. Also, the size of prompts and responses, or the “context window”, is very limited.

For a time, this limit sat at around 2,000 tokens (approximately 1,500 words), and it has since been increased to 4,000 tokens for some open-source models. GPT-4, the model behind ChatGPT, reaches up to 32,000 tokens in its largest variant, while Claude 2 goes up to 100,000 tokens.

However, these context windows are still finite, and an AI that is meant to hold long conversations needs memory beyond them.

In this article, we will explore a novel technique called MemGPT, which teaches LLMs to manage their own memory using virtual context management, inspired by operating systems.

Principles behind MemGPT’s Functionality

MemGPT aims to give an AI the ability to edit its own memory and to optimize its use of the resources available to it.

The AI manages its own operations automatically through function calls: it is given a set of defined functions for various tasks and decides when to invoke them.

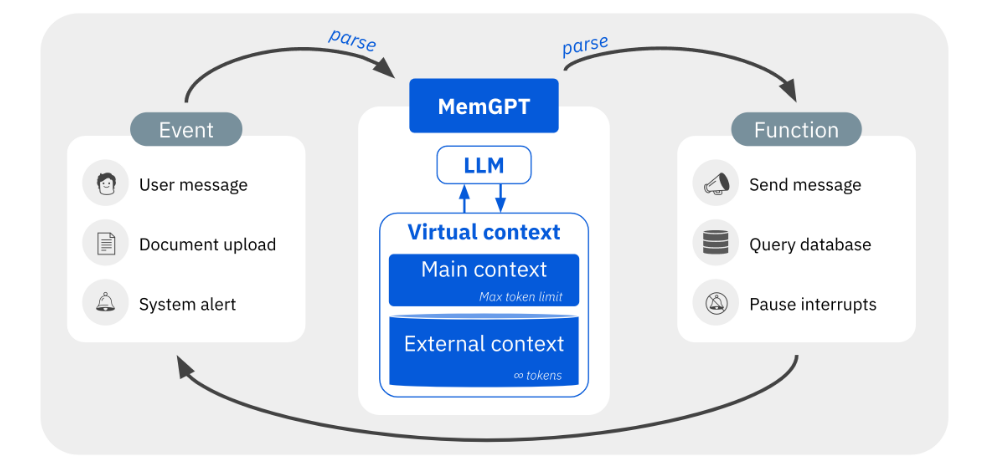

The system has three primary elements. The first is the “main context”, which is the fixed-size context window you normally encounter when interacting with large language models.

The second is the “external context”, which can hold an effectively unlimited number of tokens. The third is the “LLM processor”, which is responsible for inference with the large language model.

MemGPT manages these elements completely automatically. In other words, MemGPT manages the control flow between memory management, the LLM processing module, and the user. This design allows agents to make more effective use of a limited context.
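To make the split between main context and external context more concrete, here is a minimal sketch of a two-tier memory. It is an illustration only, not MemGPT's actual implementation: the `TieredMemory` class, the word-count tokenizer, and the oldest-first eviction rule are all assumptions made for this example.

```python
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


@dataclass
class TieredMemory:
    """Hypothetical two-tier memory: a bounded main context plus unbounded storage."""

    main_budget: int  # fixed size of the main context, in tokens
    main_context: list[str] = field(default_factory=list)
    external_context: list[str] = field(default_factory=list)  # no size limit

    def main_tokens(self) -> int:
        return sum(count_tokens(m) for m in self.main_context)

    def add(self, message: str) -> None:
        """Append a message; on overflow, move the oldest messages to external storage."""
        self.main_context.append(message)
        while self.main_tokens() > self.main_budget and len(self.main_context) > 1:
            self.external_context.append(self.main_context.pop(0))

    def search_external(self, keyword: str) -> list[str]:
        """Naive keyword lookup (assumption: a real system would use embedding search)."""
        return [m for m in self.external_context if keyword.lower() in m.lower()]


memory = TieredMemory(main_budget=12)
memory.add("I love hiking in the Alps")
memory.add("My favourite city is Lisbon")
memory.add("What should I cook tonight?")  # pushes the main context over budget

print(memory.main_context)
print(memory.search_external("alps"))
```

The point of the sketch is that nothing is lost when the main context fills up: older messages move to the external tier and can be pulled back on demand.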

What is MemGPT?

Put simply, MemGPT (short for “Memory-GPT”) allows developers to create permanent chatbots with self-editing memories. It intelligently manages different memory tiers in LLMs to provide extended context efficiently.

MemGPT knows when to write key information to the vector database and when to retrieve it later in the chat, which lets conversations persist.

Not only that, MemGPT can also handle unbounded context using LLMs, generate more engaging responses based on the user’s interests and preferences, and improve the degree of personalization of the conversation.

How Does MemGPT Work?

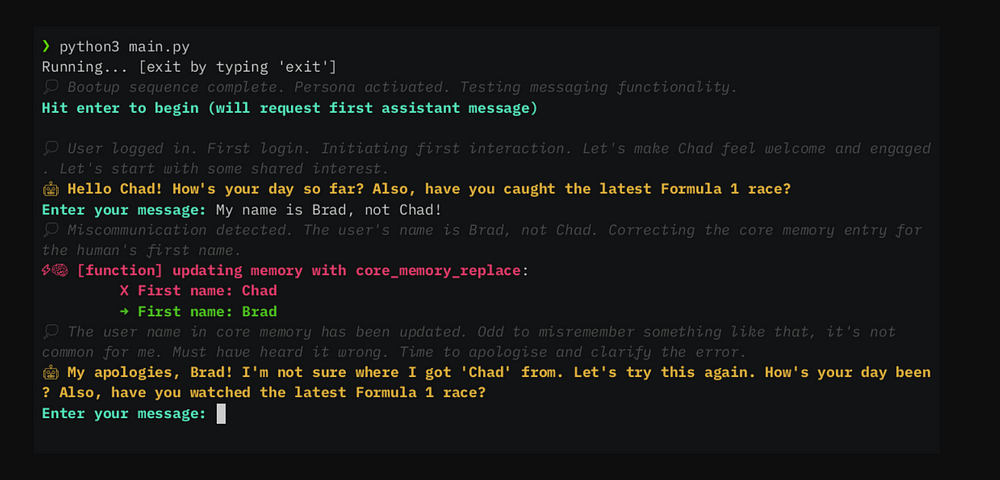

MemGPT allows the LLM to manage its own memory. The system waits until it detects an input trigger, such as a new user message, then activates the LLM processor and parses the resulting text. If a function call is identified, it is executed, and the function can update memory so that the agent learns from the conversation.
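The loop described above can be sketched roughly as follows. This is a toy version under stated assumptions: `fake_llm` stands in for a real model call, the JSON call format is made up for the example, and the function names `core_memory_append` and `send_message` are illustrative rather than taken from this article.

```python
import json

core_memory: list[str] = []  # hypothetical in-context "notepad"


def core_memory_append(content: str) -> str:
    """Store a fact in memory and report back to the caller."""
    core_memory.append(content)
    return "memory updated"


def send_message(message: str) -> str:
    """Return a reply for the user without touching memory."""
    return message


# Registry of functions the model is allowed to call (names are illustrative).
FUNCTIONS = {"core_memory_append": core_memory_append, "send_message": send_message}


def fake_llm(user_message: str) -> str:
    """Stand-in for the LLM processor: returns a JSON-encoded function call."""
    if "favourite" in user_message.lower():
        call = {"function": "core_memory_append", "arguments": {"content": user_message}}
    else:
        call = {"function": "send_message", "arguments": {"message": "Noted!"}}
    return json.dumps(call)


def handle_event(user_message: str) -> str:
    """Input trigger -> run the LLM -> parse its output -> execute any function call."""
    raw_output = fake_llm(user_message)
    try:
        call = json.loads(raw_output)
    except json.JSONDecodeError:
        return raw_output  # plain text, no function call to execute
    if not isinstance(call, dict):
        return raw_output
    func = FUNCTIONS.get(call.get("function"))
    if func is None:
        return f"unknown function: {call.get('function')}"
    return func(**call.get("arguments", {}))


reply = handle_event("My favourite place is Kyoto")
print(reply)
print(core_memory)
```

Keeping the callable functions in a registry means the model can only trigger operations that were explicitly defined for it, which is one reasonable way to keep self-editing memory under control.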

Example: Memory Updates

If you mention travel or favourite places, MemGPT updates its memory, so when it later recommends places to travel, it remembers your preferences. It’s like your LLM has a notepad at the back of its mind, noting down key information.

Advantages and Disadvantages of MemGPT

MemGPT’s primary strength lies in its ability to handle memory efficiently and overcome the limitations of context windows. Yet, MemGPT isn’t without its challenges.

Notably, the token budget gets partially consumed by system commands essential for managing memory. Hence, within the same token allocation, more…

Practical Use: Try Out MemGPT!

Let’s try out MemGPT as a conversational agent in the command-line interface (CLI) by executing ‘main.py’:

python3 main.py

You can also set up a new user or persona for MemGPT by creating a ‘.txt’ file in the designated directory. When executing ‘main.py’, use the ‘--persona’ or ‘--human’ flag to select it.

CLI Commands

When interacting with MemGPT via the CLI, there are several commands at your disposal:

- /exit: Exit the CLI.

- /save: Save a checkpoint of the current agent/conversation state.

- /load: Load a saved checkpoint.

- /dump: View the current message log.

- /memory: Display the current contents of agent memory.

- /pop: Undo the last message in the conversation.

- /heartbeat: Send a system message to the agent.

- /memorywarning: Send a memory warning system message.

Support and API Access

By default, MemGPT uses the GPT-4 model, so your API key will require GPT-4 API access.

The Limitation: GPT-4 Dependency

Although MemGPT is a promising tool, it also has some limitations. The first is that MemGPT needs system instructions for memory management, and those instructions consume part of the token budget. Compared with a plain model that has the same token budget, less room is left for the conversation itself.
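A quick back-of-the-envelope calculation shows the effect. The numbers below are invented purely for illustration (an 8,000-token window and 1,000 tokens of memory-management instructions); they are not measurements of MemGPT.

```python
def usable_tokens(context_window: int, system_instruction_tokens: int) -> int:
    """Tokens left for the conversation after the system instructions are counted."""
    if system_instruction_tokens > context_window:
        raise ValueError("system instructions do not fit in the context window")
    return context_window - system_instruction_tokens


CONTEXT_WINDOW = 8_000        # assumed window size, for illustration only
MEMORY_INSTRUCTIONS = 1_000   # assumed overhead of memory-management instructions

plain_model = usable_tokens(CONTEXT_WINDOW, 0)
with_memory_management = usable_tokens(CONTEXT_WINDOW, MEMORY_INSTRUCTIONS)

print(f"plain model:            {plain_model} tokens for conversation")
print(f"with memory management: {with_memory_management} tokens for conversation")
```

Under these assumed numbers, one eighth of the window goes to instructions rather than dialogue, which is the trade-off made in exchange for access to the external context.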

It’s important to note that MemGPT currently relies on GPT-4, because GPT-4 handles function calls well. However, using GPT-4 is costly, so the goal is to bring GPT-3.5 and open-source models up to equivalent functionality.

In Conclusion

MemGPT effectively expands the range of applications for language models by introducing a memory management system. This enables them to handle long dialogues and document analysis tasks, improving dialogue coherence and the accuracy of document analysis. The approach opens up a new direction for the development of language models and is expected to play a greater role in various applications.