The OpenChat team recently released their new open-source large language model, OpenChat, on Hugging Face. It is fine-tuned with a focus on helpfulness and outperforms many larger models on the Alpaca-Eval, MT-bench, and Vicuna-bench benchmarks.

The model described in the OpenChat paper has about 13 billion parameters, while the newer OpenChat 3.5 has about 7 billion, small enough to run on a consumer GPU. It can answer user questions and generate text.

My homepage is like my personal lab for playing around with large language models, and yes, it’s as fun as it sounds! Lately, it’s the new OpenChat model that truly excites me.

In this article, we’re going to show you how the new OpenChat AI can beat ChatGPT.

I highly recommend reading this article to the end: OpenChat 3.5 is a game changer for chatbots, and you will see its power for yourself!


What Is the OpenChat Model?

OpenChat AI is a novel framework for advancing open-source language models with mixed-quality data.

It leverages different data sources to improve language models and proposes a new method called Conditioned Reinforcement Learning Fine-Tuning that learns a class-conditioned policy to make the most of complementary data quality information.

With this framework, open-source language models can be trained on mixed-quality data and still improve their performance.
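To make "mixed-quality data" concrete, here is a minimal sketch of how training examples could be tagged with a coarse-grained reward based only on the data source they came from. The source names (`gpt4`, `gpt35`), the reward values 1.0 and 0.1, and the helper `label_by_source` are illustrative assumptions, not taken from the OpenChat code.

```python
from dataclasses import dataclass

# Hypothetical coarse-grained rewards: one scalar per data source, not per example.
# Expert data (e.g. GPT-4 conversations) gets a higher reward than sub-optimal data.
SOURCE_REWARDS = {"gpt4": 1.0, "gpt35": 0.1}


@dataclass
class Example:
    prompt: str
    response: str
    source: str  # which dataset the example came from, e.g. "gpt4" or "gpt35"


@dataclass
class LabeledExample:
    example: Example
    reward: float  # coarse-grained reward inherited from the example's source


def label_by_source(examples: list[Example]) -> list[LabeledExample]:
    """Attach the source-level reward to every example; no human preference labels needed."""
    labeled = []
    for ex in examples:
        if ex.source not in SOURCE_REWARDS:
            raise ValueError(f"unknown data source: {ex.source}")
        labeled.append(LabeledExample(ex, SOURCE_REWARDS[ex.source]))
    return labeled


data = [
    Example("What is 2 + 2?", "2 + 2 = 4.", source="gpt4"),
    Example("Name a prime number.", "7 is prime.", source="gpt35"),
]
for item in label_by_source(data):
    print(item.example.source, item.reward)
```

The point of the design is that nobody ranks or compares individual answers: every example from the same source shares one reward.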

Key Features of OpenChat:

- Simplicity: OpenChat is a simple, lightweight framework that can be applied to any mixed-quality dataset and any base language model.

- RL-free training: OpenChat uses C-RLFT, a class-conditioned RLFT method that is trained with supervised learning rather than a reinforcement learning loop, so it is lightweight and avoids costly human preference labeling.

- Minimal reward quality requirements: OpenChat uses different data sources as coarse-grained reward labels and learns a class-conditioned policy to leverage complementary data quality information.

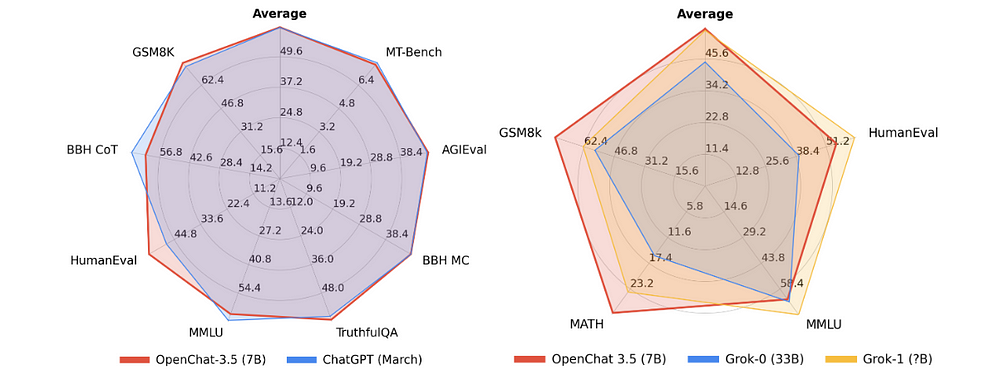

- High performance: In experiments on three standard benchmarks for instruction-following ability (Alpaca-Eval, MT-bench, and Vicuna-bench), OpenChat outperforms previous 13B open-source language models and even outperforms gpt-3.5-turbo on all three.

- Generalization: OpenChat also achieves the top-1 average accuracy among all 13B open-source language models on AGIEval, demonstrating its ability to generalize.
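A "class-conditioned policy" needs to know which class (data source) each conversation belongs to. One simple way to provide that, assumed here, is a source-specific tag in the prompt's role labels. The tags "GPT4"/"GPT3", the `<|end_of_turn|>` separator, and the helper `build_conditioned_prompt` are assumptions for illustration; check the OpenChat repository for the exact template your model version expects.

```python
# Hypothetical condition tags: one per data source (the "class" the policy is conditioned on).
CONDITION_TAG = {"gpt4": "GPT4", "gpt35": "GPT3"}
END_OF_TURN = "<|end_of_turn|>"  # assumed turn separator


def build_conditioned_prompt(source: str, user_message: str) -> str:
    """Format a single-turn prompt whose role labels encode the data source."""
    tag = CONDITION_TAG[source]
    return f"{tag} User: {user_message}{END_OF_TURN}{tag} Assistant:"


# During training, each example is formatted with its own source's tag.
print(build_conditioned_prompt("gpt35", "Name a prime number."))
# At inference time, you would always request the expert (high-reward) condition.
print(build_conditioned_prompt("gpt4", "Explain C-RLFT in one sentence."))
```

Because the condition lives in the input, one set of weights can learn both behaviors, and inference simply asks for the better one.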

Workflow of OpenChat

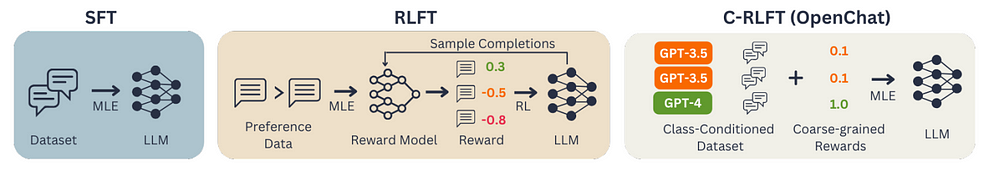

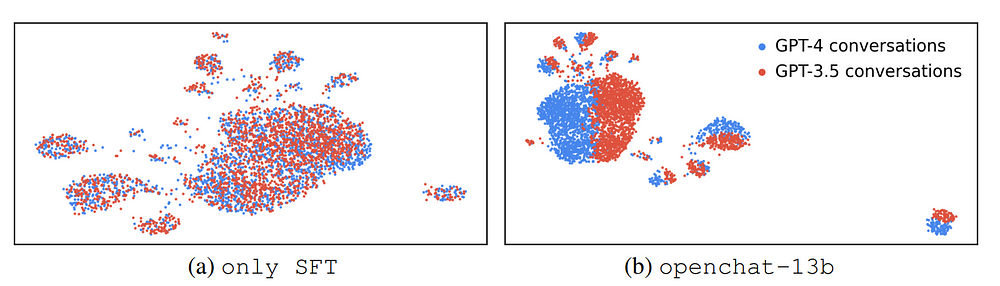

- OpenChat uses Conditioned Reinforcement Learning Fine-Tuning (C-RLFT), a class-conditioned RLFT method, to train on mixed-quality data and reach performance on par with ChatGPT, even with a 7B model that can run on a consumer GPU.

- C-RLFT regards different data sources as coarse-grained reward labels and learns a class-conditioned policy to leverage complementary data quality information.

- The optimal policy in C-RLFT can be easily solved through single-stage, RL-free supervised learning, which is lightweight and avoids costly human preference labeling.

- OpenChat considers general non-pairwise (and non-ranking-based) SFT training data: a small amount of expert data plus a large proportion of easily accessible sub-optimal data, without any preference labels.

- OpenChat can be applied to any mixed-quality dataset and any base language model.

- OpenChat treats the fine-tuned LLM itself as a class-conditioned policy and regularizes it toward a better, more informative class-conditioned reference policy instead of the original pre-trained LLM.
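The workflow above boils down to reward-weighted supervised learning. Here is a minimal sketch, assuming a reward-weighted negative log-likelihood in which each example's weight is simply its source's coarse-grained reward (the paper's exact weighting may differ). The per-token log-probabilities are made-up numbers standing in for a model's outputs, and `crlft_loss` is a hypothetical helper, not part of the `ochat` package.

```python
import math


def sequence_log_prob(token_log_probs: list[float]) -> float:
    """log pi(y | x, c) for one response: the sum of its per-token log-probabilities."""
    return sum(token_log_probs)


def crlft_loss(batch: list[tuple[list[float], float]]) -> float:
    """Reward-weighted negative log-likelihood, averaged over the batch.

    Each batch item is (per-token log-probs of the response under its
    class-conditioned prompt, coarse-grained reward of the example's source).
    """
    total = 0.0
    for token_log_probs, reward in batch:
        total += -reward * sequence_log_prob(token_log_probs)
    return total / len(batch)


# Toy batch: one expert example (reward 1.0) and one sub-optimal example (reward 0.1).
expert = ([math.log(0.9), math.log(0.8)], 1.0)
suboptimal = ([math.log(0.5), math.log(0.4)], 0.1)
print(round(crlft_loss([expert, suboptimal]), 4))
```

Because the weight is a per-source constant, training reduces to ordinary supervised fine-tuning with example weights: there is no reward model to train and no sampling loop, which is what makes the method single-stage and RL-free. Sub-optimal data still contributes, just with a smaller weight than expert data.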

What is the difference between SFT and RLFT methods?

There are two common ways to align open-source base language models with specific abilities: supervised fine-tuning (SFT) and reinforcement learning fine-tuning (RLFT). OpenChat’s C-RLFT builds on both.

SFT methods involve training the model on a small amount of labeled data that is specific to the target task. This approach is simple and effective but requires high-quality labeled data, which can be expensive and time-consuming to obtain.

RLFT methods, on the other hand, use reinforcement learning to fine-tune the model on the target task. Rather than imitating labeled demonstrations directly, the model is optimized against a reward signal, which in LLM alignment usually comes from a reward model trained on costly human preference labels. RLFT methods can be more flexible than SFT methods but require more computational resources and can be harder to train.
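To see the difference in a toy setting, the sketch below applies both kinds of update to a policy over three candidate responses. The SFT step raises the probability of a given labeled response; the RLFT step samples a response and scales the same gradient by a reward, which is a REINFORCE-style policy-gradient update. The three-response setup, the learning rate, and `reward_fn` are hypothetical.

```python
import math
import random


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def grad_log_prob(logits: list[float], action: int) -> list[float]:
    """Gradient of log softmax(logits)[action] w.r.t. the logits: onehot(action) - probs."""
    probs = softmax(logits)
    return [(1.0 if i == action else 0.0) - p for i, p in enumerate(probs)]


def sft_step(logits: list[float], label: int, lr: float = 0.5) -> list[float]:
    """SFT: gradient ascent on the log-likelihood of the labeled response."""
    g = grad_log_prob(logits, label)
    return [z + lr * gi for z, gi in zip(logits, g)]


def rlft_step(logits: list[float], reward_fn, rng: random.Random, lr: float = 0.5) -> list[float]:
    """RLFT (REINFORCE): sample a response, then scale its log-prob gradient by the reward."""
    probs = softmax(logits)
    action = rng.choices(range(len(probs)), weights=probs)[0]
    g = grad_log_prob(logits, action)
    r = reward_fn(action)
    return [z + lr * r * gi for z, gi in zip(logits, g)]


def reward_fn(action: int) -> float:
    """Hypothetical reward: only response 2 counts as good."""
    return 1.0 if action == 2 else 0.0


logits = [0.0, 0.0, 0.0]  # uniform policy over 3 candidate responses
print("SFT :", [round(p, 3) for p in softmax(sft_step(logits, label=2))])

rng = random.Random(0)
for _ in range(50):
    logits = rlft_step(logits, reward_fn, rng)
print("RLFT:", [round(p, 3) for p in softmax(logits)])
```

This mirrors the trade-off above: SFT needs the correct answer up front, while RLFT only needs a reward but has to sample responses and typically needs many more updates to learn the same preference.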

OpenChat Vs ChatGPT

On the three instruction-following benchmarks (Alpaca-Eval, MT-bench, and Vicuna-bench), OpenChat outperforms previous 13B open-source language models and even beats gpt-3.5-turbo, the model behind ChatGPT.

Furthermore, OpenChat achieves the highest MT-bench score, even exceeding open-source language models with much larger parameters, such as llama-2-chat-70b. However, it is worth noting that the performance of OpenChat may depend on the specific dataset and task at hand.

How to Install the OpenChat LLM

- Prerequisite: Before installing OpenChat, make sure you have PyTorch installed on your system because OpenChat depends on it. You can install PyTorch by following the instructions on the official PyTorch website.

- Install OpenChat using pip: Once PyTorch is set up, you can install OpenChat directly from PyPI (Python Package Index) by running the following command in your terminal or command prompt:

```shell
pip3 install ochat
```

If you run into any compatibility issues with packages during installation, it’s recommended to try the conda method described below.
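To confirm that PyTorch and OpenChat are importable in the current Python environment, you can run a small check like the one below. It uses only the standard library; `torch` and `ochat` are the import names of the PyTorch and OpenChat packages, while the helper names are hypothetical.

```python
import importlib.util
import sys


def is_installed(module_name: str) -> bool:
    """Return True if `module_name` can be found in this environment (without importing it)."""
    return importlib.util.find_spec(module_name) is not None


def check_prerequisites() -> dict[str, bool]:
    """Report whether the packages OpenChat needs are available."""
    return {name: is_installed(name) for name in ("torch", "ochat")}


if __name__ == "__main__":
    print(f"Python {sys.version.split()[0]}")
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'found' if ok else 'missing'}")
```

Run it with the same interpreter you will use for OpenChat (for example, inside an activated conda environment), since each environment has its own set of packages.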

Installation with conda

1. Create a new conda environment: This avoids conflicts with other packages you might have. Run these commands in your terminal:

```shell
conda create -y --name openchat python=3.11
conda activate openchat
```

This creates a new environment named openchat and activates it.

2. Install OpenChat in the conda environment: With the environment activated, use pip3 to install OpenChat:

```shell
pip3 install ochat
```

If you prefer to install OpenChat from source, clone the repository from GitHub (https://github.com/imoneoi/openchat) and install it with pip; the repository README lists the exact commands.


Conclusion

OpenChat focuses on improving instruction-following ability rather than on text generation or coding specifically, and it offers notable advantages: simplicity, RL-free training, and minimal reward quality requirements. Despite these encouraging results, there is room for further research, such as refining the coarse-grained rewards assigned to each data source and applying OpenChat to improve the reasoning abilities of LLMs.

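References

- OpenChat GitHub repository: https://github.com/imoneoi/openchat

- OpenChat: Advancing Open-source Language Models with Mixed-Quality Data (arXiv:2309.11235): https://arxiv.org/abs/2309.11235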