In this article, I have a super quick tutorial showing you how to create an AI for your PDF with LangChain, RAG Fusion, and GPT-4o to build a powerful agent chatbot for business or personal use.

RAG Fusion improves on traditional search systems by overcoming their limitations through a multi-query approach.

GPT-4o is a higher-end model than GPT-4 Turbo, announced on May 14, 2024.

The “o” in GPT-4o is an abbreviation for omni.

Omni is a Latin prefix that means “all, whole, omnidirectional.”

Therefore, compared with GPT-4 Turbo, which until now could only handle text and images, GPT-4o can be described as a “multimodal AI that can also take voice input.”

However, RAG alone only partially meets user needs, and it needs to evolve further. In this story, we will focus on RAG Fusion, how the Retrieval Augmented Generation Fusion process works, and the features of GPT-4o.


What is RAG Fusion?

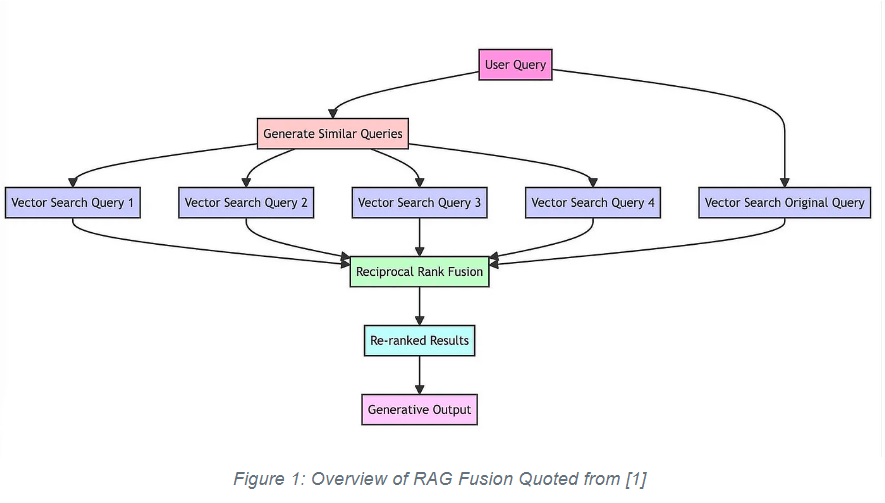

The idea is to generate multiple queries derived from the original query, re-rank (order) the search results for each query using an algorithm called Reciprocal Rank Fusion, and extract those with a high degree of relevance.

RAG Fusion flow

The method is very simple. The key points are to first have the LLM generate similar queries (step 1 above) and then to rerank the chunks obtained by vector search for each query (step 3 above).

By generating multiple similar queries in step 1, you can pick up a wide range of related chunks when performing a vector search. Also, by performing reranking in step 3, you can preferentially select chunks that rank high in many queries.
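To make the three steps concrete, here is a toy sketch of the flow without LangChain. It is not the article's code: `fake_generate_queries` is a hypothetical stand-in for the LLM call in step 1, and `keyword_search` is a hypothetical word-overlap search standing in for vector search over a tiny made-up corpus.

```python
from collections import defaultdict
from typing import Callable

# Toy corpus standing in for the PDF chunks (illustrative data).
CORPUS = {
    "d1": "rag fusion generates multiple queries",
    "d2": "reciprocal rank fusion reranks results",
    "d3": "vector search finds similar chunks",
    "d4": "gpt-4o is a multimodal model",
}


def keyword_search(query: str, top_k: int = 2) -> list[str]:
    """Hypothetical stand-in for vector search: rank docs by word overlap."""
    words = set(query.lower().split())
    hits = [
        (len(words & set(text.split())), doc_id)
        for doc_id, text in CORPUS.items()
    ]
    # Keep docs sharing at least one word; best overlap first, ties by id.
    hits = sorted((h for h in hits if h[0] > 0), key=lambda h: (-h[0], h[1]))
    return [doc_id for _, doc_id in hits[:top_k]]


def fake_generate_queries(query: str) -> list[str]:
    """Hypothetical stand-in for the LLM call in step 1."""
    return ["rag fusion multiple queries", "similar chunks vector search"]


def rag_fusion_retrieve(
    query: str,
    generate_queries: Callable[[str], list[str]],
    search: Callable[[str], list[str]],
    k: int = 60,
    max_docs: int = 8,
) -> list[str]:
    # Step 1: generate similar queries and keep the original one as well.
    queries = [query] + generate_queries(query)
    # Step 2: run one search per query; each list is ordered best-first.
    result_lists = [search(q) for q in queries]
    # Step 3: rerank with Reciprocal Rank Fusion (1-based ranks).
    scores: dict[str, float] = defaultdict(float)
    for ranked in result_lists:
        for rank, doc_id in enumerate(ranked, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.__getitem__, reverse=True)[:max_docs]
```

Here `keyword_search("what is rag fusion")` on its own returns only d1 and d2, while `rag_fusion_retrieve("what is rag fusion", fake_generate_queries, keyword_search)` also surfaces d3 through a generated query, and ranks d1 first because two queries placed it at rank 1.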

Compared with standard RAG, which uses only vector search, RAG Fusion increases processing time and cost because generating similar queries requires an extra call to the LLM. However, since query generation needs relatively few input and output tokens, the added time and cost are small.

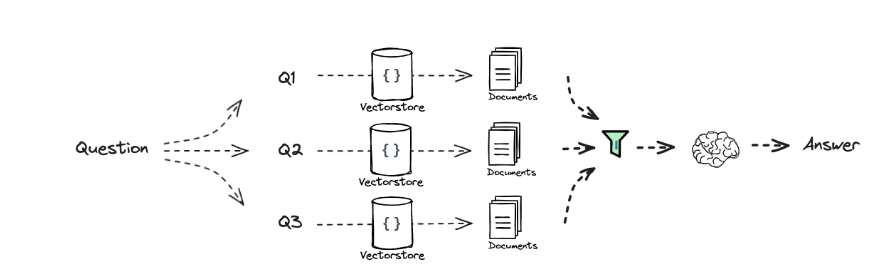

Why use multiple queries?

In traditional search systems, users often enter a single query to find information, but this approach has limitations. A single query may not capture the full scope of what your users are interested in, or may be too narrow to provide comprehensive results. Therefore, it is important to generate multiple queries from different perspectives.

What is the Difference Between RAG and RAG Fusion?

The key difference between RAG and RAG Fusion lies in how they generate queries and process results. While RAG relies on a single query, RAG Fusion generates multiple queries, providing richer context for the search.

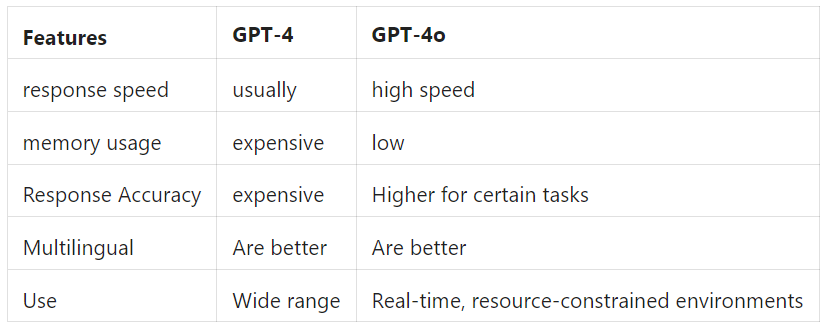

What is the Difference Between GPT-4 and GPT-4o?

GPT-4 is used in a wide range of applications, including creative writing, complex data analysis, and customer support automation, and is particularly effective in situations where response accuracy is required.

On the other hand, GPT-4o is ideal for use in chatbots, interactive applications that require real-time processing, and resource-constrained IoT devices.

Performance comparison

The table below compares the performance of GPT-4 and GPT-4o.

Disclaimer: This time, I tried implementing RAG Fusion using LangChain, following the flow above. I have slightly modified code from an existing repository.

Let’s get started. To follow along, you need to install a few Python libraries, namely pypdf, chromadb, langchain_openai, and langchain (operator and argparse ship with the Python standard library). If you haven’t already done so, you can simply type:

pip install -r requirements.txt
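Since the contents of requirements.txt are not shown, a quick check like the one below can report which modules are still missing before you run the script. This is a hypothetical helper, not part of the article's code, and the module list is my assumption based on the script's imports; note that TokenTextSplitter additionally needs the tiktoken package.

```python
import importlib.util

# Import names (not pip names) assumed from the script's imports.
REQUIRED_MODULES = [
    "pypdf",
    "chromadb",
    "tiktoken",  # needed by TokenTextSplitter
    "langchain",
    "langchain_community",
    "langchain_core",
    "langchain_openai",
    "langchain_text_splitters",
]


def missing_modules(names: list[str]) -> list[str]:
    """Return the top-level modules that cannot be found on this interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    print("Missing:", ", ".join(missing) if missing else "nothing")
```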

Once you have done that, let’s head over to a Python file and import all the libraries we will use:

"""

RAG Fusion sample

"""

import argparse

import os

import sys

from operator import itemgetter

from langchain.load import dumps, loads

from langchain_community.document_loaders import PyPDFLoader

from langchain_community.vectorstores import Chroma

from langchain_core.documents.base import Document

from langchain_core.output_parsers import StrOutputParser

from langchain_core.prompts import ChatPromptTemplate, PromptTemplate

from langchain_core.retrievers import BaseRetriever

from langchain_core.runnables import RunnableLambda, RunnablePassthrough

from langchain_openai import ChatOpenAI, OpenAIEmbeddings

from langchain_text_splitters import TokenTextSplitter

Next, let’s set up the script to perform document retrieval and query tasks with the GPT-4o API, and set EMBEDDING_MODEL to OpenAI’s text-embedding-3-small.

We initialise an argument parser object which will be used to handle command-line arguments provided to the script.

Define Retriever options

- TOP_K: Specifies the number of top results to return from a search or retrieval operation.

- MAX_DOCS_FOR_CONTEXT: The maximum number of documents to use for providing context in a retrieval or query response.

- DOCUMENT_PDF: The path to the local PDF file to load.

# LLM model
LLM_MODEL_OPENAI = "gpt-4o"
EMBEDDING_MODEL = "text-embedding-3-small"

# argparse
parser = argparse.ArgumentParser()
parser.add_argument('-q', '--query', help='Query with RRF search')
parser.add_argument('-r', '--retriever', help='Retrieve with RRF retriever')
parser.add_argument('-v', '--vector', help='Query with vector search')

# Retriever options
TOP_K = 5
MAX_DOCS_FOR_CONTEXT = 8
DOCUMENT_PDF = "2402.03367v2.pdf"

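# .env: a key already exported in your environment takes precedence;
# otherwise replace the placeholder below with your own key.
os.environ.setdefault('OPENAI_API_KEY', 'Your_API_KEY')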

Create a prompt template:

my_template_prompt = """Please answer the [question] using only the following [information]. If there is no [information] available to answer the question, do not force an answer.

Information: {context}

Question: {question}

Final answer:"""

Create a function called load_and_split_document that reads the text from the PDF and then splits it into chunks:

def load_and_split_document(pdf: str) -> list[Document]:
    """Load and split document

    Args:
        pdf (str): Path to the PDF file

    Returns:
        list[Document]: split documents
    """
    # Read the text documents from 'pdf'
    raw_documents = PyPDFLoader(pdf).load()

    # Define chunking strategy
    text_splitter = TokenTextSplitter(chunk_size=2048, chunk_overlap=24)

    # Split the documents
    documents = text_splitter.split_documents(raw_documents)

    # for TEST
    print("Original document: ", len(documents), " docs")

    return documents
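TokenTextSplitter(chunk_size=2048, chunk_overlap=24) cuts the text into windows of up to 2,048 tokens, each sharing 24 tokens with the previous window. The sketch below illustrates that sliding-window idea on a plain list, assuming whitespace-separated words as a stand-in for the tiktoken tokens the real splitter counts; `split_into_windows` is a hypothetical helper, not a LangChain API.

```python
def split_into_windows(
    tokens: list[str], chunk_size: int, chunk_overlap: int
) -> list[list[str]]:
    """Split tokens into windows of chunk_size that overlap by chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        # Stop once a window reaches the end, so no tail-only chunk is emitted.
        if start + chunk_size >= len(tokens):
            break
    return chunks


words = "a b c d e f g h i j".split()
print(split_into_windows(words, chunk_size=4, chunk_overlap=1))
```

With a window of 4 and an overlap of 1, the ten words become three chunks whose boundaries share one word each, which keeps sentences that straddle a boundary retrievable from either side.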

Next, we create a function named create_retriever that loads and splits the document, creates text embeddings, and stores them in a vector database. New information can be added later by passing it to the same vector database.

def create_retriever(search_type: str, kwargs: dict) -> BaseRetriever:
    """Create vector retriever

    Args:
        search_type (str): search type (e.g. "similarity")
        kwargs (dict): search_kwargs for the retriever (e.g. {"k": TOP_K})

    Returns:
        BaseRetriever: Retriever
    """
    # load and split document
    documents = load_and_split_document(DOCUMENT_PDF)

    # chroma db
    embeddings = OpenAIEmbeddings(model=EMBEDDING_MODEL)
    vectordb = Chroma.from_documents(documents, embeddings)

    # retriever
    retriever = vectordb.as_retriever(
        search_type=search_type,
        search_kwargs=kwargs,
    )

    return retriever
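Conceptually, a "similarity" retriever embeds the query and returns the k stored chunks whose embeddings are closest to it. The toy sketch below shows only that top-k step, using cosine similarity over hand-made 3-dimensional vectors; the vectors and the `similarity_search` helper are illustrative assumptions, not Chroma's implementation. Chroma's default distance is L2 rather than cosine, but for unit-length vectors (OpenAI embeddings are normalized to length 1) both give the same ranking.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def similarity_search(
    query_vec: list[float], index: dict[str, list[float]], k: int
) -> list[str]:
    """Return the ids of the k stored vectors most similar to query_vec."""
    ranked = sorted(
        index.items(), key=lambda item: cosine(query_vec, item[1]), reverse=True
    )
    return [doc_id for doc_id, _ in ranked[:k]]


# Hypothetical "embeddings" for four chunks.
index = {
    "a": [1.0, 0.0, 0.0],
    "b": [0.9, 0.1, 0.0],
    "c": [0.0, 1.0, 0.0],
    "d": [0.0, 0.0, 1.0],
}
print(similarity_search([1.0, 0.0, 0.0], index, k=2))
```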

Next, we perform a vector search for each query to find similar documents, followed by a re-ranking of these documents. For re-ranking, we use Reciprocal Rank Fusion (RRF), which relies on similarity ranks.

The score of a document d in Reciprocal Rank Fusion, RRF(d), is calculated from rank_i(d), the rank of d in the result list for the i-th query, and a hyperparameter k:

$$\mathrm{RRF}(d) = \sum_{i} \frac{1}{k + \mathrm{rank}_i(d)}$$

The hyperparameter k shapes the RRF scoring: the higher the value of k, the flatter the score curve, which increases the influence of lower-ranked documents. k = 60 is commonly used, and I adopt this value here.
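To make the effect of k concrete, here is a small worked calculation of RRF(d) = Σ_i 1/(k + rank_i(d)) with exact fractions and 1-based ranks. `rrf_score` is a hypothetical helper for illustration, not part of the article's script. It compares how much a rank-1 hit outweighs a rank-10 hit, and whether a single top hit beats two mid-ranked hits.

```python
from fractions import Fraction


def rrf_score(ranks: list[int], k: int = 60) -> Fraction:
    """RRF(d) = sum over result lists of 1 / (k + rank), ranks starting at 1."""
    return sum((Fraction(1, k + r) for r in ranks), Fraction(0))


# Flatness: how much more a rank-1 hit is worth than a rank-10 hit.
for k in (0, 60):
    ratio = rrf_score([1], k) / rrf_score([10], k)
    print(f"k={k}: rank 1 is worth {float(ratio):.2f}x rank 10")

# Consensus: doc A is rank 1 in one list; doc B is rank 3 in two lists.
for k in (0, 60):
    a, b = rrf_score([1], k), rrf_score([3, 3], k)
    print(f"k={k}: A={float(a):.4f}, B={float(b):.4f}")
```

With k = 0, a rank-1 hit is worth 10 times a rank-10 hit, and document A (score 1) beats document B (score 2/3). With k = 60 the ratio shrinks to 70/61 ≈ 1.15, and B (2/63) overtakes A (1/61), so chunks that several queries agree on win out.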

The function to calculate Reciprocal Rank Fusion is named reciprocal_rank_fusion. For testing purposes, I am displaying the RRF scores, but I am only returning a list of document chunks because the content of these chunks is all that needs to be passed as context to the LLM.

Since each of the four similar queries retrieves five chunks, the total number of chunks can reach 20. However, as this creates an excessive amount of context, only the top-ranked chunks are used. The maximum number of documents passed as context, MAX_DOCS_FOR_CONTEXT, is set to 8.

def reciprocal_rank_fusion(results: list[list], k=60):
    """Rerank docs (Reciprocal Rank Fusion)

    Args:
        results (list[list]): retrieved documents, one list per query
        k (int, optional): parameter k for RRF. Defaults to 60.

    Returns:
        list[Document]: top MAX_DOCS_FOR_CONTEXT documents reranked by RRF
    """
    fused_scores = {}
    for docs in results:
        # Assumes the docs are returned in sorted order of relevance.
        # Ranks start at 1 to match RRF(d) = sum_i 1 / (k + rank_i(d)).
        for rank, doc in enumerate(docs, start=1):
            # Serialize so the same chunk from different queries shares one key
            doc_str = dumps(doc)
            if doc_str not in fused_scores:
                fused_scores[doc_str] = 0
            fused_scores[doc_str] += 1 / (rank + k)

    reranked_results = [
        (loads(doc), score)
        for doc, score in sorted(fused_scores.items(), key=lambda x: x[1], reverse=True)
    ]

    # for TEST (print reranked documents and scores)
    print("Reranked documents: ", len(reranked_results))
    for doc in reranked_results:
        print('---')
        print('Docs: ', ' '.join(doc[0].page_content[:100].split()))
        print('RRF score: ', doc[1])

    # return only documents
    return [x[0] for x in reranked_results[:MAX_DOCS_FOR_CONTEXT]]

The next function, query_generator, generates similar queries. It inserts the original query, original_query, into a prompt and asks the LLM (Large Language Model) to generate similar queries. The original code used only the prompt ‘Generate multiple search queries related to: {original_query}’, which produced fairly broad queries.

To narrow down the results, I have updated the prompt to include a directive: ‘When creating queries, please refine or add closely related contextual information in Japanese, without significantly altering the original query’s meaning.’

def query_generator(original_query: dict) -> list[str]:
    """Generate queries from original query

    Args:
        original_query (dict): dict holding the original query under "query"

    Returns:
        list[str]: list of generated queries (original query first)
    """
    # original query
    query = original_query.get("query")

    # prompt for query generator
    prompt = ChatPromptTemplate.from_messages([
        ("system", "You are a helpful assistant that generates multiple search queries based on a single input query."),
        ("user", "Generate multiple search queries related to: {original_query}. When creating queries, please refine or add closely related contextual information in Japanese, without significantly altering the original query's meaning"),
        ("user", "OUTPUT (3 queries):")
    ])

    # LLM model
    model = ChatOpenAI(
        temperature=0,
        model_name=LLM_MODEL_OPENAI
    )

    # query generator chain: one query per line, blank lines dropped
    query_generator_chain = (
        prompt | model | StrOutputParser()
        | (lambda x: [q for q in x.split("\n") if q.strip()])
    )

    # generate queries
    queries = query_generator_chain.invoke({"original_query": query})

    # add original query
    queries.insert(0, "0. " + query)

    # for TEST
    print('Generated queries:\n', '\n'.join(queries))

    return queries
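The chain splits the LLM output on newlines, so any numbering such as “1.” stays inside each query (which is why the original query is prefixed with “0.”). If you prefer clean query strings, a small parser like the hypothetical `parse_queries` below could replace the lambda. The numbering formats it strips (“1.”, “1)”, “-”, “*”) are my assumption about typical model output, and a preamble line such as “Here are 3 queries:” would still need to be filtered separately.

```python
import re

# Leading list markers: "1." / "1)" / "-" / "*", followed by optional spaces.
_NUMBERING = re.compile(r"^\s*(?:\d+[.)]|[-*])\s*")


def parse_queries(llm_output: str) -> list[str]:
    """Turn a numbered or bulleted LLM answer into a list of bare queries."""
    queries = []
    for line in llm_output.splitlines():
        cleaned = _NUMBERING.sub("", line).strip()
        if cleaned:  # skip blank lines
            queries.append(cleaned)
    return queries


print(parse_queries("1. What is RAG Fusion?\n\n2) How does RRF work?\n- RRF k parameter\n"))
```

Because LCEL coerces plain functions into runnables, it could be wired in as `prompt | model | StrOutputParser() | parse_queries`; in that case the original query would be inserted without the “0. ” prefix.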

Next, we have the rrf_retriever function, which performs a vector search using multiple similar queries. The function create_retriever(...) loads and splits the document, builds a vector store with Chroma, and returns a Retriever object (details are available in the code repository).

Vector searches are conducted as follows:

- RunnableLambda(query_generator) generates similar queries as described above.
- Using retriever.map(), we perform a vector search for each of the four queries produced by query_generator (three generated queries plus the original query). map() retrieves five chunks (TOP_K) for each query.
- Finally, we apply reciprocal_rank_fusion to re-rank the results using the RRF scoring described earlier.

def rrf_retriever(query: str) -> list[Document]:
    """RRF retriever

    Args:
        query (str): Query string

    Returns:
        list[Document]: retrieved documents
    """
    # Retriever
    retriever = create_retriever(search_type="similarity", kwargs={"k": TOP_K})

    # RRF chain
    chain = (
        {"query": itemgetter("query")}
        | RunnableLambda(query_generator)
        | retriever.map()
        | reciprocal_rank_fusion
    )

    # invoke
    result = chain.invoke({"query": query})

    return result

The entire RAG Fusion query process is defined as a single function. It works like a standard RAG (Retrieval-Augmented Generation) pipeline, but if you pass rrf_retriever instead of a standard vector-search retriever, it performs RAG Fusion.

If a normal vector search Retriever is used, it will conduct a regular vector search.

The chain’s definition might seem complex, but this complexity arises because we want to output not just the LLM’s answer but also the context provided to it. Essentially, the process simply connects retriever → prompt → model.
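Stripped of LangChain, the chain's data flow looks roughly like the sketch below: retrieve context for the question, fill the prompt, call the model, and return both the answer and the context. `answer_with_context`, `retrieve`, and `generate` are hypothetical stand-ins for the chain, the retriever, and ChatOpenAI. Unlike the original chain, which places the list of Document objects directly into {context}, this sketch joins plain-text chunks with blank lines.

```python
from typing import Callable


def answer_with_context(
    question: str,
    retrieve: Callable[[str], list[str]],
    generate: Callable[[str], str],
    template: str,
) -> dict:
    """Return the model's answer together with the context it was given."""
    context = retrieve(question)  # the retriever step
    prompt = template.format(context="\n\n".join(context), question=question)
    return {"response": generate(prompt), "context": context}


template = "Information: {context}\n\nQuestion: {question}\n\nFinal answer:"
result = answer_with_context(
    "What is RRF?",
    retrieve=lambda q: ["chunk A", "chunk B"],  # stub retriever
    generate=lambda p: "answer based on: " + p.splitlines()[0],  # stub model
    template=template,
)
print(result["response"])
print(result["context"])
```

Keeping the context in the output is what lets the script show which chunks the answer was grounded in.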

def query(query: str, retriever: BaseRetriever):
    """
    Query with vectordb
    """
    # model
    model = ChatOpenAI(
        temperature=0,
        model_name=LLM_MODEL_OPENAI)

    # prompt
    prompt = PromptTemplate(
        template=my_template_prompt,
        input_variables=["context", "question"],
    )

    # Query chain
    chain = (
        {
            "context": itemgetter("question") | retriever,
            "question": itemgetter("question")
        }
        | RunnablePassthrough.assign(
            context=itemgetter("context")
        )
        | {
            "response": prompt | model | StrOutputParser(),
            "context": itemgetter("context"),
        }
    )

    # execute chain
    result = chain.invoke({"question": query})

    return result

We create the main function as the script’s entry point. It checks the API key, chooses a retriever based on the command-line arguments (the RRF retriever or plain vector search), and prints the result.

# main
def main():
    # OpenAI API KEY (catches both an unset and an empty variable)
    if not os.environ.get("OPENAI_API_KEY"):
        print("`OPENAI_API_KEY` is not set", file=sys.stderr)
        sys.exit(1)

    # args
    args = parser.parse_args()

    if args.query:
        # RAG Fusion: answer using the RRF retriever
        retriever = RunnableLambda(rrf_retriever)
        result = query(args.query, retriever)
    elif args.retriever:
        # Only run the RRF retriever (prints reranked chunks), no answer
        rrf_retriever(args.retriever)
        sys.exit(0)
    elif args.vector:
        # Plain RAG: answer using a normal vector-search retriever
        retriever = create_retriever(
            search_type="similarity",
            kwargs={"k": MAX_DOCS_FOR_CONTEXT}
        )
        result = query(args.vector, retriever)
    else:
        sys.exit(0)

    # print answer
    print('---\nAnswer:')
    print(result['response'])


if __name__ == '__main__':
    main()
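The three flags map to the branches in main() as sketched below. `selected_mode` is a hypothetical helper that rebuilds the same argparse options and mirrors the if/elif order in main() without calling any APIs, so you can check which path a given command line takes. For example, `-q "What is RAG Fusion?"` runs the full RAG Fusion answer, while `-v` with the same question runs plain vector-search RAG for comparison.

```python
import argparse

# Same three options as the script above.
parser = argparse.ArgumentParser()
parser.add_argument('-q', '--query', help='Query with RRF search')
parser.add_argument('-r', '--retriever', help='Retrieve with RRF retriever')
parser.add_argument('-v', '--vector', help='Query with vector search')


def selected_mode(argv: list[str]) -> str:
    """Report which branch of main() a command line would take."""
    args = parser.parse_args(argv)
    if args.query:
        return "rag_fusion_answer"    # RRF retriever + LLM answer
    if args.retriever:
        return "rrf_retrieval_only"   # print reranked chunks, no answer
    if args.vector:
        return "plain_vector_answer"  # normal vector search + LLM answer
    return "no_op"                    # main() exits without doing anything


print(selected_mode(["-q", "What is RAG Fusion?"]))
print(selected_mode(["--vector", "What is RAG Fusion?"]))
```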

Conclusion

I tried RAG Fusion, which uses Reciprocal Rank Fusion (RRF) for reranking.

In addition to the PDF introduced in this article, I tried loading several of my own PDFs, and my subjective evaluation is as follows.

- When the original query is simple, such as “What is 〇〇?” with only one word, it is possible to pick up surrounding information by generating similar queries, which tends to lead to a wider range of answers.

- If the original query is specific, it is not much different from normal vector search.

- For complex queries that contain multiple questions, breaking them down with similar queries increases the probability of answering all of them without missing anything.

- When generating similar queries, their breadth needs to be tuned through the prompt.

Because it generates similar queries, I feel it is especially effective at enriching the final answers to simple questions.


REFERENCE :