Jensen Huang says: “You’re not going to lose your job to AI. You’re going to lose your job to somebody who uses AI. Your company is not going to go out of business because of AI. Your company is going to go out of business because another company used AI.”

Looking back at 2023, agents were the next big thing: they were supposed to eliminate millions of data-entry jobs, replace brokers, and automate tasks such as invoicing or rewriting an application’s code in a different language.

Now it’s 2024, and AI agents are proving to be more than just digital assistants. In this tutorial, you will learn how to build a multi-agent app from scratch using Phidata and an LLM (the code below uses OpenAI’s GPT-4o or GPT-4 Turbo).

What we are building today is a multi-agent chatbot that takes requests from users and picks the right tool or team member to handle each one. It has three specialist agents:

- Research Assistant: prompted to act as a senior New York Times researcher writing a cover-story research report.
- Investment Assistant: prompted to act as a senior investment analyst at Goldman Sachs writing an investment report for an important client.
- Python Assistant: writes and runs Python code, for example for calculations.

Typical questions are “What is the stock price of NVDA?” or “Write a comparison between NVDA and AMD: which one should a beginner invest in?”

Before we start! 🦸🏻♀️

If you like this topic and you want to support me:

- Follow me on Medium and subscribe for free to get my latest articles 🫶

- Follow me on my YouTube channel

What is Phidata?

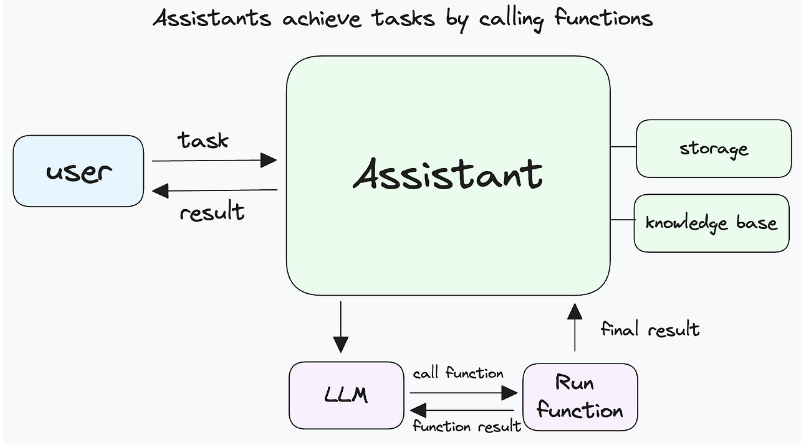

Phidata is a toolkit for building AI assistants with function calling. Function calling lets a large language model (LLM) call functions defined in your code; the assistant then uses each function’s result to decide the next step and answer the user’s question.

Phidata Workflow:

It all starts with a simple action: a user sends a request to the AI assistant to perform an action or obtain information.

The AI assistant passes the request to the large language model (LLM), along with descriptions of the functions it is allowed to call.

The LLM interprets the natural-language request and selects the function (and its arguments) that can perform the task.

The selected function runs, retrieving data from storage or interacting with other systems.

The function completes its task and returns the results to the AI assistant.

Finally, the AI assistant presents the results to the user, completing the journey of the request.
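To make the loop concrete, here is a library-free sketch of the same six steps. It is not Phidata’s implementation: `fake_llm_choose_tool` is a hypothetical keyword-based stand-in for the LLM, and `get_stock_price` and `search_web` are placeholder tools that return dummy strings instead of real data.

```python
import json
from typing import Callable, Dict


def get_stock_price(symbol: str) -> str:
    """Placeholder tool: a real app would query Yahoo Finance here."""
    return f"<price of {symbol.upper()}>"


def search_web(query: str) -> str:
    """Placeholder tool: a real app would call a search API here."""
    return f"<search results for {query!r}>"


# Registry of functions the "model" is allowed to call
TOOLS: Dict[str, Callable[..., str]] = {
    "get_stock_price": get_stock_price,
    "search_web": search_web,
}


def fake_llm_choose_tool(request: str) -> str:
    """Hypothetical stand-in for the LLM: returns a tool call encoded as JSON."""
    if "price" in request.lower():
        symbol = request.split()[-1].strip("?")
        return json.dumps({"name": "get_stock_price", "arguments": {"symbol": symbol}})
    return json.dumps({"name": "search_web", "arguments": {"query": request}})


def handle_request(request: str) -> str:
    # The model reads the request and picks a function plus arguments
    call = json.loads(fake_llm_choose_tool(request))
    # The assistant runs the chosen function and collects its result
    result = TOOLS[call["name"]](**call["arguments"])
    # The result is turned into an answer for the user
    return f"Answer based on: {result}"


print(handle_request("What is the price of nvda?"))
```

In a real assistant the routing decision comes from the model’s function-calling output rather than a keyword check, but the dispatch step (look up the function by name, call it with the returned arguments) has the same shape.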

Phidata Features:

The main features of Phidata are:

- User-defined functions: you can freely define the functions the AI assistant is able to call.
- Easy to use: Phidata provides a simple interface, so an assistant can be set up with only a few lines of code.
- Scalability: Phidata can be extended with new tools and team members to handle a wider variety of tasks.
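To illustrate the idea behind user-defined functions: before an LLM can call a Python function, the framework has to describe that function to it (name, purpose, parameters). Phidata generates these descriptions for you; the sketch below is not its implementation, only a simplified illustration that builds an OpenAI-style tool description from a function’s signature and docstring using `inspect`. The type mapping and the `get_company_news` example function are hypothetical.

```python
import inspect
from typing import Any, Callable, Dict, List

# Simplified mapping from Python annotations to JSON-schema type names (an assumption)
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_function(fn: Callable[..., Any]) -> Dict[str, Any]:
    """Build a tool description from a function's signature and docstring."""
    properties: Dict[str, Any] = {}
    required: List[str] = []
    for name, param in inspect.signature(fn).parameters.items():
        # Unannotated parameters fall back to "string"
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value means the model must supply it
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_company_news(symbol: str, num_stories: int = 3) -> str:
    """Get the latest news for a stock symbol."""
    return f"<{num_stories} stories about {symbol}>"


print(describe_function(get_company_news))
```

The docstring becomes the description the model reads when deciding which tool to use, which is why clear docstrings matter for custom tools.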

Let’s Start Coding:

Install dependencies

First, we install Phidata and the related libraries from the project’s requirements file:

```bash
pip install -r requirements.txt
```
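The article doesn’t list the contents of `requirements.txt`. Assuming it includes the packages used below (`phidata`, `openai`, `yfinance`, `duckduckgo-search`, `exa_py`, and `streamlit`), this small script checks that their import names resolve before you start the app. The module list is an assumption; adjust it to match your own requirements file.

```python
import importlib.util
from typing import Iterable, List

# Assumed import names for the packages this tutorial relies on
REQUIRED_MODULES = ["phi", "openai", "yfinance", "duckduckgo_search", "exa_py", "streamlit"]


def missing_modules(modules: Iterable[str]) -> List[str]:
    """Return the top-level modules that cannot be found in the current environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    print("Missing modules:", ", ".join(missing) if missing else "none")
```

`find_spec` only locates the module without importing it, so the check is fast and has no side effects for top-level names like these.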

Once everything is installed, we import the following:

- YFinanceTools: retrieves stock data from Yahoo Finance
- OpenAIChat: chats with an OpenAI large language model
- Assistant: the base assistant (agent) class
- PythonAssistant: an assistant that writes and runs Python code
- DuckDuckGo: searches the web with DuckDuckGo
- Toolkit: the base class for a collection of tools
- ExaTools: searches the web with Exa

```python
from textwrap import dedent
from typing import List, Optional

import streamlit as st  # used by the Streamlit UI code later in this article
from phi.assistant import Assistant
from phi.assistant.python import PythonAssistant
from phi.llm.openai import OpenAIChat
from phi.tools import Toolkit
from phi.tools.duckduckgo import DuckDuckGo
from phi.tools.exa import ExaTools
from phi.tools.yfinance import YFinanceTools
from phi.utils.log import logger
```
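`OpenAIChat` and `ExaTools` call hosted APIs, so they need API keys. Assuming the keys are read from the `OPENAI_API_KEY` and `EXA_API_KEY` environment variables (the usual defaults, but check the docs for the versions you install), a quick check like this one gives a clearer error than a failed API call in the middle of a chat.

```python
import os
from typing import Iterable, List, Mapping, Optional

# Assumed environment variable names for the OpenAI and Exa API keys
REQUIRED_ENV_VARS = ["OPENAI_API_KEY", "EXA_API_KEY"]


def missing_env_vars(names: Iterable[str], env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the variables that are unset or empty in env (defaults to os.environ)."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


if __name__ == "__main__":
    missing = missing_env_vars(REQUIRED_ENV_VARS)
    if missing:
        print("Please set:", ", ".join(missing))
```

Passing `env` explicitly makes the function easy to test with a plain dictionary.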

Inside `get_agent()`, we build a `tools` list for the agent. If `ddg_search` is enabled, we add the DuckDuckGo search tool, capped at 3 results. If `finance_tools` is enabled, we add `YFinanceTools` with stock prices, company info, analyst recommendations, and company news turned on.

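```python
# Inside get_agent(): start with empty lists of tools and extra instructions
tools: List[Toolkit] = []
extra_instructions: List[str] = []

# Add tools available to the Agent
if ddg_search:
    tools.append(DuckDuckGo(fixed_max_results=3))
if finance_tools:
    tools.append(
        YFinanceTools(stock_price=True, company_info=True, analyst_recommendations=True, company_news=True)
    )
```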
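Because tool selection is plain conditional logic, it can be checked without any API keys. The sketch below is a hypothetical, library-free mirror of that logic: `build_tool_specs` returns the toolkit names and keyword arguments that the code above would pass, instead of constructing the real `DuckDuckGo` and `YFinanceTools` objects.

```python
from typing import Any, Dict, List


def build_tool_specs(ddg_search: bool, finance_tools: bool) -> List[Dict[str, Any]]:
    """Describe which toolkits would be created, and with which arguments."""
    specs: List[Dict[str, Any]] = []
    if ddg_search:
        specs.append({"toolkit": "DuckDuckGo", "kwargs": {"fixed_max_results": 3}})
    if finance_tools:
        specs.append(
            {
                "toolkit": "YFinanceTools",
                "kwargs": {
                    "stock_price": True,
                    "company_info": True,
                    "analyst_recommendations": True,
                    "company_news": True,
                },
            }
        )
    return specs


print(build_tool_specs(ddg_search=True, finance_tools=False))
```

Separating the "which tools" decision from the "construct the tools" step is one way to unit-test the sidebar flags before wiring in real toolkits.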

Next, we create a Python assistant for writing and running Python code. If `python_assistant` is enabled, a `PythonAssistant` that can write and run code, install packages with pip, and use Streamlit for charting is created and added to the team. We also add an instruction telling the main agent to delegate Python coding tasks to this assistant.

```python
# Add team members available to the Agent
team: List[Assistant] = []
if python_assistant:
    _python_assistant = PythonAssistant(
        name="Python Assistant",
        llm=OpenAIChat(model=llm_id),
        role="Write and run python code",
        pip_install=True,
        charting_libraries=["streamlit"],
    )
    team.append(_python_assistant)
    extra_instructions.append("To write and run python code, delegate the task to the `Python Assistant`.")
```
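Phidata handles delegation to team members for you, and the exact mechanism isn’t covered in this article. As a rough, hypothetical illustration of the idea, the sketch below exposes each team member to the leader as a named callable tool. The `Member` class and the `delegate_to_<name>` naming scheme are assumptions for this example, not Phidata’s API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Member:
    """Hypothetical team member: a name, a role description, and a run function."""
    name: str
    role: str
    run: Callable[[str], str]


def make_delegation_tools(team: List[Member]) -> Dict[str, Callable[[str], str]]:
    """Expose each team member to the leader as a tool it can call with a task."""
    tools: Dict[str, Callable[[str], str]] = {}
    for member in team:
        tool_name = "delegate_to_" + member.name.lower().replace(" ", "_")
        tools[tool_name] = member.run
    return tools


team = [Member("Python Assistant", "Write and run python code", lambda task: f"[python] {task}")]
leader_tools = make_delegation_tools(team)
print(leader_tools["delegate_to_python_assistant"]("compute 2 + 2"))  # [python] compute 2 + 2
```

The `extra_instructions` strings in the article play the complementary role: they tell the leader, in natural language, when to use each delegation.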

Next, we create the Research Assistant, which searches the web and writes research reports. If `research_assistant` is enabled, an `Assistant` is created that writes NYT-quality research reports, uses `search_exa` (from ExaTools) for research, and follows a defined report format; it is then added to the team. We also add an instruction to delegate research reports to this assistant and to return the report in the specified format without any extra text.

```python
if research_assistant:
    _research_assistant = Assistant(
        name="Research Assistant",
        role="Write a research report on a given topic",
        llm=OpenAIChat(model=llm_id),
        description="You are a Senior New York Times researcher tasked with writing a cover story research report.",
        instructions=[
            "For a given topic, use the `search_exa` to get the top 10 search results.",
            "Carefully read the results and generate a final - NYT cover story worthy report in the <report_format> provided below.",
            "Make your report engaging, informative, and well-structured.",
            "Remember: you are writing for the New York Times, so the quality of the report is important.",
        ],
        expected_output=dedent(
            """\
            An engaging, informative, and well-structured report in the following format:
            <report_format>
            ## Title

            - **Overview** Brief introduction of the topic.
            - **Importance** Why is this topic significant now?

            ### Section 1
            - **Detail 1**
            - **Detail 2**

            ### Section 2
            - **Detail 1**
            - **Detail 2**

            ## Conclusion
            - **Summary of report:** Recap of the key findings from the report.
            - **Implications:** What these findings mean for the future.

            ## References
            - [Reference 1](Link to Source)
            - [Reference 2](Link to Source)
            </report_format>
            """
        ),
        # Note: num_results=5 may cap Exa at 5 results, although the instructions mention the top 10
        tools=[ExaTools(num_results=5, text_length_limit=1000)],
        markdown=True,
        add_datetime_to_instructions=True,
        debug_mode=debug_mode,
    )
    team.append(_research_assistant)
    extra_instructions.append(
        "To write a research report, delegate the task to the `Research Assistant`. "
        "Return the report in the <report_format> to the user as is, without any additional text like 'here is the report'."
    )
```
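The instructions ask the model to return the report in `<report_format>` without extra text like “here is the report”, but models don’t always comply. If you want to enforce that in code, a hypothetical post-processing step could strip any preamble before the first Markdown heading and check that the required sections are present. The helper names and the required-heading list are assumptions, not part of Phidata.

```python
from typing import List, Sequence

# Headings the research report format requires (taken from the format above)
REQUIRED_HEADINGS = ("## Conclusion", "## References")


def strip_preamble(report: str) -> str:
    """Drop any chatter (e.g. 'Here is the report:') before the first Markdown heading."""
    lines = report.splitlines()
    for i, line in enumerate(lines):
        if line.lstrip().startswith("#"):
            return "\n".join(lines[i:])
    return report  # no heading found: leave the text unchanged


def missing_headings(report: str, required: Sequence[str] = REQUIRED_HEADINGS) -> List[str]:
    """Return the required headings that do not appear as their own line."""
    present = {line.strip() for line in report.splitlines()}
    return [heading for heading in required if heading not in present]


draft = "Here is the report:\n## Title\n- **Overview** x\n## Conclusion\n- ok"
print(strip_preamble(draft))
print(missing_headings(draft))  # ['## References']
```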

Next, let’s create the Investment Assistant, which writes Goldman Sachs-quality investment reports.

If `investment_assistant` is enabled, an `Assistant` is created that uses YFinanceTools for research and follows a defined report format; it is then added to the team. We also add instructions to delegate investment reports to this assistant, return the report in the specified format without extra text, answer follow-up questions using the report, and never give investment advice without the report.

```python
if investment_assistant:
    _investment_assistant = Assistant(
        name="Investment Assistant",
        role="Write an investment report on a given company (stock) symbol",
        llm=OpenAIChat(model=llm_id),
        description="You are a Senior Investment Analyst for Goldman Sachs tasked with writing an investment report for a very important client.",
        instructions=[
            "For a given stock symbol, get the stock price, company information, analyst recommendations, and company news",
            "Carefully read the research and generate a final - Goldman Sachs worthy investment report in the <report_format> provided below.",
            "Provide thoughtful insights and recommendations based on the research.",
            "When you share numbers, make sure to include the units (e.g., millions/billions) and currency.",
            "REMEMBER: This report is for a very important client, so the quality of the report is important.",
        ],
        expected_output=dedent(
            """\
            <report_format>
            ## [Company Name]: Investment Report

            ### **Overview**
            {give a brief introduction of the company and why the user should read this report}
            {make this section engaging and create a hook for the reader}

            ### Core Metrics
            {provide a summary of core metrics and show the latest data}
            - Current price: {current price}
            - 52-week high: {52-week high}
            - 52-week low: {52-week low}
            - Market Cap: {Market Cap} in billions
            - P/E Ratio: {P/E Ratio}
            - Earnings per Share: {EPS}
            - 50-day average: {50-day average}
            - 200-day average: {200-day average}
            - Analyst Recommendations: {buy, hold, sell} (number of analysts)

            ### Financial Performance
            {analyze the company's financial performance}

            ### Growth Prospects
            {analyze the company's growth prospects and future potential}

            ### News and Updates
            {summarize relevant news that can impact the stock price}

            ### [Summary]
            {give a summary of the report and what are the key takeaways}

            ### [Recommendation]
            {provide a recommendation on the stock along with a thorough reasoning}

            </report_format>
            """
        ),
        tools=[YFinanceTools(stock_price=True, company_info=True, analyst_recommendations=True, company_news=True)],
        # This setting tells the LLM to format messages in markdown
        markdown=True,
        add_datetime_to_instructions=True,
        debug_mode=debug_mode,
    )
    team.append(_investment_assistant)
    extra_instructions.extend(
        [
            # Adjacent string literals are joined into a single instruction
            "To get an investment report on a stock, delegate the task to the `Investment Assistant`. "
            "Return the report in the <report_format> to the user without any additional text like 'here is the report'.",
            "Answer any questions they may have using the information in the report.",
            "Never provide investment advice without the investment report.",
        ]
    )
```
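The Investment Assistant is told to include units (millions/billions) and currency with every number. If you format figures yourself, for example a market cap returned by a finance tool, a small helper keeps that convention consistent. `format_amount` is a hypothetical helper and assumes short-scale units (1 billion = 10^9).

```python
from typing import List, Tuple

# Short-scale units, largest first
_UNITS: List[Tuple[float, str]] = [(1e12, "trillion"), (1e9, "billion"), (1e6, "million")]


def format_amount(value: float, currency: str = "USD") -> str:
    """Format a number with an explicit unit and currency, e.g. '2.50 billion USD'."""
    for threshold, unit in _UNITS:
        if abs(value) >= threshold:
            return f"{value / threshold:.2f} {unit} {currency}"
    # Smaller amounts keep thousands separators instead of a unit word
    return f"{value:,.2f} {currency}"


print(format_amount(2_500_000_000))  # 2.50 billion USD
```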

Next, we let the user select the LLM in the Streamlit sidebar. A dropdown offers “gpt-4o” and “gpt-4-turbo”, and the selected model is stored in the session state. If the user switches models, the session state is updated and the assistant is restarted, so the app always uses the model the user selected.

```python
# Get LLM Model
llm_id = st.sidebar.selectbox("Select LLM", options=["gpt-4o", "gpt-4-turbo"]) or "gpt-4o"
# Set llm_id in session state
if "llm_id" not in st.session_state:
    st.session_state["llm_id"] = llm_id
# Restart the assistant if llm_id changes
elif st.session_state["llm_id"] != llm_id:
    st.session_state["llm_id"] = llm_id
    restart_assistant()
```
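The code calls `restart_assistant()`, but its definition isn’t shown in this article. Here is a minimal sketch, assuming a restart only needs to drop the cached agent (the "Get the agent" step later rebuilds it whenever `st.session_state["agent"]` is `None`) and then rerun the script. The session state and rerun function are passed in as parameters so the logic can be tested without Streamlit; in the app you could call `restart_assistant(st.session_state, st.rerun)` or wrap that call in a no-argument function.

```python
from typing import Any, Callable, MutableMapping


def restart_assistant(state: MutableMapping[str, Any], rerun: Callable[[], None]) -> None:
    """Drop the cached agent so it is rebuilt with the new settings, then rerun the app."""
    state["agent"] = None  # the "Get the agent" step creates a new agent when this is None
    rerun()  # in Streamlit, st.rerun() restarts the script from the top
```

Other settings such as `llm_id` stay in the session state, so the rebuilt agent picks up the user’s latest choices.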

Next, checkboxes in the Streamlit sidebar enable web search (DuckDuckGo) and finance tools (Yahoo Finance). Their values are kept in Streamlit’s session state; when a checkbox changes, we update the session state and call `restart_assistant()`, which presumably restarts the assistant with the new settings.

```python
# Enable Web Search via DuckDuckGo
if "ddg_search_enabled" not in st.session_state:
    st.session_state["ddg_search_enabled"] = True
# Get ddg_search_enabled from session state if set
ddg_search_enabled = st.session_state["ddg_search_enabled"]
# Checkbox for enabling web search
ddg_search = st.sidebar.checkbox("Web Search", value=ddg_search_enabled, help="Enable web search using DuckDuckGo.")
if ddg_search_enabled != ddg_search:
    st.session_state["ddg_search_enabled"] = ddg_search
    ddg_search_enabled = ddg_search
    restart_assistant()

# Enable finance tools
if "finance_tools_enabled" not in st.session_state:
    st.session_state["finance_tools_enabled"] = True
# Get finance_tools_enabled from session state if set
finance_tools_enabled = st.session_state["finance_tools_enabled"]
# Checkbox for enabling finance tools
finance_tools = st.sidebar.checkbox("Yahoo Finance", value=finance_tools_enabled, help="Enable finance tools.")
if finance_tools_enabled != finance_tools:
    st.session_state["finance_tools_enabled"] = finance_tools
    finance_tools_enabled = finance_tools
    restart_assistant()
```
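Every checkbox in this app repeats the same steps: store a default, read it back, show the checkbox, and restart when the value changes. As an optional refactor, here is a hypothetical `feature_toggle` helper that captures the pattern once. It takes the state mapping, the checkbox function, and the change callback as parameters, so it can be tested with plain Python objects; this is a sketch, not code from the original app.

```python
from typing import Any, Callable, MutableMapping


def feature_toggle(
    state: MutableMapping[str, Any],
    key: str,
    checkbox: Callable[..., bool],
    label: str,
    on_change: Callable[[], None],
    default: bool = True,
    help_text: str = "",
) -> bool:
    """Generic version of the repeated checkbox pattern; returns the current flag value."""
    state.setdefault(key, default)  # first run: store the default
    current = bool(state[key])
    new_value = bool(checkbox(label, value=current, help=help_text))
    if new_value != current:  # the user toggled the checkbox
        state[key] = new_value
        on_change()  # e.g. restart the assistant
    return new_value
```

In the app, a call might look like `ddg_search_enabled = feature_toggle(st.session_state, "ddg_search_enabled", st.sidebar.checkbox, "Web Search", restart_assistant, help_text="Enable web search using DuckDuckGo.")`, assuming a `restart_assistant` that takes no arguments, as in the code above.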

A similar checkbox in the sidebar enables the Research Assistant, which uses the Exa search tool. Its value is stored in the session state in the same way, and changing it calls `restart_assistant()` so the assistant is rebuilt with the new settings.

```python
# Enable Research Assistant
if "research_assistant_enabled" not in st.session_state:
    st.session_state["research_assistant_enabled"] = True
# Get research_assistant_enabled from session state if set
research_assistant_enabled = st.session_state["research_assistant_enabled"]
# Checkbox for enabling the research assistant
research_assistant = st.sidebar.checkbox(
    "Research Assistant",
    value=research_assistant_enabled,
    help="Enable the research assistant (uses Exa).",
)
if research_assistant_enabled != research_assistant:
    st.session_state["research_assistant_enabled"] = research_assistant
    research_assistant_enabled = research_assistant
    restart_assistant()
```

The Python Assistant gets its own checkbox as well. A session state variable tracks whether it is enabled; if the user toggles the checkbox, we update that variable and the local flag, then call `restart_assistant()`.

```python
# Enable Python Assistant
if "python_assistant_enabled" not in st.session_state:
    st.session_state["python_assistant_enabled"] = True
# Get python_assistant_enabled from session state if set
python_assistant_enabled = st.session_state["python_assistant_enabled"]
# Checkbox for enabling the Python assistant
python_assistant = st.sidebar.checkbox(
    "Python Assistant",
    value=python_assistant_enabled,
    help="Enable the Python Assistant for writing and running python code.",
)
if python_assistant_enabled != python_assistant:
    st.session_state["python_assistant_enabled"] = python_assistant
    python_assistant_enabled = python_assistant
    restart_assistant()
```

Finally, a checkbox enables the Investment Assistant, which writes investment reports (its help text notes that this is not financial advice). It follows the same session state pattern and calls `restart_assistant()` when the value changes.

```python
# Enable Investment Assistant
if "investment_assistant_enabled" not in st.session_state:
    st.session_state["investment_assistant_enabled"] = True
# Get investment_assistant_enabled from session state if set
investment_assistant_enabled = st.session_state["investment_assistant_enabled"]
# Checkbox for enabling the investment assistant
investment_assistant = st.sidebar.checkbox(
    "Investment Assistant",
    value=investment_assistant_enabled,
    help="Enable the investment assistant. NOTE: This is not financial advice.",
)
if investment_assistant_enabled != investment_assistant:
    st.session_state["investment_assistant_enabled"] = investment_assistant
    investment_assistant_enabled = investment_assistant
    restart_assistant()
```

Next, we get the agent. If no agent exists in the session state (or it has been reset to `None`), we log a message and create one with `get_agent()`, passing in the selected model and the feature flags from the sidebar, then store it in the session state. Otherwise, we reuse the agent that is already there.

```python
# Get the agent
agent: Assistant
if "agent" not in st.session_state or st.session_state["agent"] is None:
    logger.info(f"---*--- Creating {llm_id} Agent ---*---")
    agent = get_agent(
        llm_id=llm_id,
        ddg_search=ddg_search_enabled,
        finance_tools=finance_tools_enabled,
        python_assistant=python_assistant_enabled,
        research_assistant=research_assistant_enabled,
        investment_assistant=investment_assistant_enabled,
    )
    st.session_state["agent"] = agent
else:
    agent = st.session_state["agent"]
```
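This is a lazy "get or create" cache: the expensive agent is built once per session and rebuilt only after it has been cleared. A hypothetical, framework-free version of the same pattern is shown below; `get_or_create` is an illustrative name, and in the app the factory would be a `lambda` that calls `get_agent()` with the same arguments as above.

```python
from typing import Any, Callable, MutableMapping, TypeVar

T = TypeVar("T")


def get_or_create(state: MutableMapping[str, Any], key: str, factory: Callable[[], T]) -> T:
    """Return state[key], building it with factory() if it is missing or None."""
    if state.get(key) is None:  # missing, or cleared by a restart
        state[key] = factory()
    return state[key]
```

Treating `None` the same as a missing key is what lets a restart force a rebuild simply by setting the cached value to `None`.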

Finally, we handle the conversation between the user and the agent. We load the chat history from the agent’s memory (or show a greeting if it is empty), append any new prompt from the chat input, and display all non-system messages. If the last message is from the user, the agent generates a response that is streamed into the page as it arrives and then appended to the chat history in the session state, which keeps the conversation intact across reruns.

```python
# Load existing messages
assistant_chat_history = agent.memory.get_chat_history()
if len(assistant_chat_history) > 0:
    logger.debug("Loading chat history")
    st.session_state["messages"] = assistant_chat_history
else:
    logger.debug("No chat history found")
    st.session_state["messages"] = [{"role": "assistant", "content": "Ask me questions..."}]

# Prompt for user input
if prompt := st.chat_input():
    st.session_state["messages"].append({"role": "user", "content": prompt})

# Display existing chat messages
for message in st.session_state["messages"]:
    if message["role"] == "system":
        continue
    with st.chat_message(message["role"]):
        st.write(message["content"])

# If last message is from a user, generate a new response
last_message = st.session_state["messages"][-1]
if last_message.get("role") == "user":
    question = last_message["content"]
    with st.chat_message("assistant"):
        response = ""
        resp_container = st.empty()
        for delta in agent.run(question):
            response += delta  # type: ignore
            resp_container.markdown(response)
        st.session_state["messages"].append({"role": "assistant", "content": response})
```
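The streaming loop accumulates text chunks and re-renders the partial response after each one. Here is the same idea pulled into a small, testable helper. `stream_response` is a hypothetical name, and it assumes `agent.run(question)` yields string chunks, as the loop above implies.

```python
from typing import Callable, Iterable


def stream_response(deltas: Iterable[str], render: Callable[[str], None]) -> str:
    """Accumulate streamed chunks, re-rendering the partial text after each one."""
    response = ""
    for delta in deltas:
        response += delta
        render(response)  # e.g. resp_container.markdown
    return response
```

In the app, the loop body could become `response = stream_response(agent.run(question), resp_container.markdown)`; rendering the whole accumulated text each time (rather than just the new chunk) is what lets `st.empty()` show a single, growing message.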

Conclusion:

Today we learned about Phidata, a suite of tools for building AI assistants that interact with users. With Phidata, you can create your own AI assistants and use them to automate tasks and obtain information.

I hope you found this tutorial helpful. Stay tuned to this series, because I’m pretty sure there’s a lot more to come. I can’t wait to see what you build with what you have learned here.

🧙♂️ I am an AI application expert! If you are looking for a Generative AI Engineer, drop an inquiry here or book a 1-on-1 consulting call with me.

📚Feel free to check out my other articles:

Also see: LangGraph + Adaptive Rag + LLama3 Python Project: Easy AI/Chat For Your Docs 2024

Also see: LangGraph + Corrective RAG + Local LLM = Powerful Rag Chatbot 2024

Also see: LangChain + RAG Fusion + GPT-4o Python Project: Easy AI/Chat for your Docs

Reference:

Phidata : https://www.phidata.com/