In this article, we are going to build a chat-with-your-image application using LLaVA and Replicate. Whether you are a beginner or an experienced programmer, I will explain every step in detail.

For those looking for a quick and easy way to create an awesome user interface for web apps, the Streamlit library is a solid option.

Try it yourself

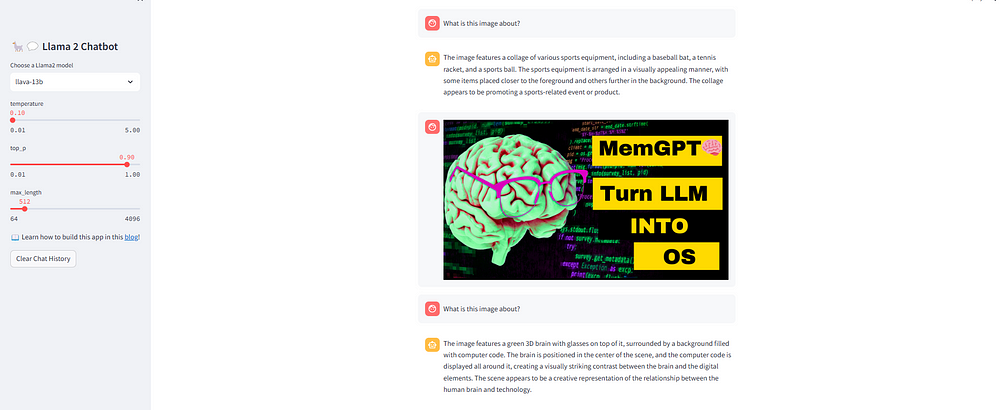

Upload your image, and then you can ask the AI questions about the image. For example, you could ask, “What is this image about?” LLaVA will then respond to your question with a text answer.

Here’s an example:

What is the LLaVA Chatbot?

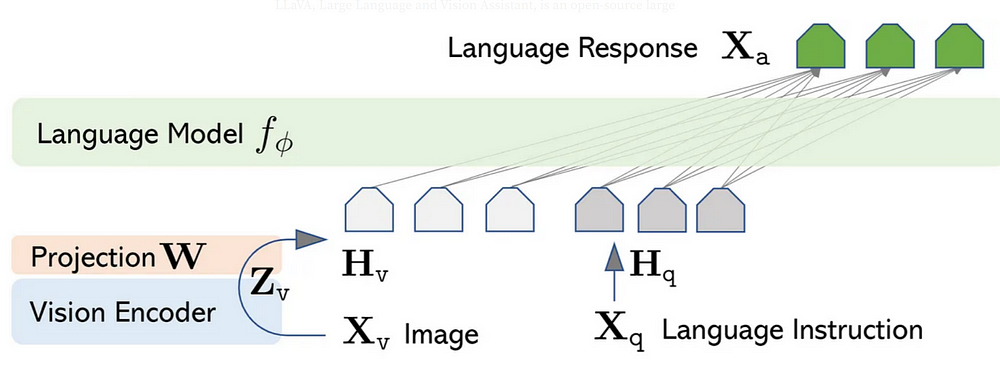

LLaVA (Large Language and Vision Assistant) is an open-source large multimodal model (LMM) that connects a pre-trained CLIP ViT-L/14 visual encoder to the Vicuna large language model (LLM) through a simple projection matrix, for general-purpose visual and language understanding. It was developed by researchers at the University of Wisconsin-Madison, Microsoft Research, and Columbia University, and first released in April 2023; the LLaVA-1.5 version used in this article followed in October 2023.

LLaVA is an end-to-end trained LMM that achieves impressive chat capabilities in the spirit of the multimodal GPT-4. It is also a cost-efficient approach to building general-purpose multimodal assistants.

Key features of LLaVA:

- Multimodal instruction data generation: LLaVA's training data was produced by using a language-only model (GPT-4) to generate language-image instruction-following pairs, which helps the model follow instructions in the multimodal domain.

- Fine-tuning capabilities: LLaVA can be fine-tuned on specific tasks, such as science question answering, to enhance its performance in domain-specific applications.

- Large language and vision model: LLaVA-1.5 combines a vision encoder with a powerful language model, allowing it to understand images and text together and answer in text.

What is Replicate?

Replicate is a cloud platform that hosts machine learning models and lets you run them through API calls, so you do not have to host the model yourself.

Which models are available for us to use?

We are going to use a LLaVA-1.5 model (llava-13b).

Ready to get started?

1. Get a Replicate API token

Here’s how you can get your Replicate API token:

1. Visit https://replicate.com/signin/.

2. Sign in with your GitHub account.

3. Go to the API tokens page and copy your API token.
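Once you have the token, the app needs to read it at startup. The sketch below is one possible way to do that, not the only one. It assumes the token is stored in an environment variable named REPLICATE_API_TOKEN (the name the replicate Python client looks for), optionally loaded from a local .env file with python-dotenv. The helper name read_replicate_token is hypothetical and only used for illustration.

```python
import os

try:
    # python-dotenv is optional here; if installed, it copies KEY=value pairs
    # from a local .env file into the process environment.
    from dotenv import load_dotenv
    load_dotenv()
except ImportError:
    pass


def read_replicate_token(environ=os.environ):
    """Return the Replicate API token, or raise a clear error if it is missing.

    Hypothetical helper: the name and the error message are illustrative.
    """
    token = environ.get("REPLICATE_API_TOKEN", "").strip()
    if not token:
        raise RuntimeError(
            "REPLICATE_API_TOKEN is not set. "
            "Add it to your environment or to a .env file."
        )
    return token
```

Failing early with a readable message is usually easier to debug than an authentication error raised later, in the middle of a chat. Keep the .env file out of version control so the token is not published by accident.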

2. Set up the coding environment

Start by creating a virtual environment on your local machine. Open your terminal and run:

    python -m venv venv

Then activate it. On Windows:

    venv\Scripts\activate

On macOS or Linux:

    source venv/bin/activate

You should now see (venv) at the start of your terminal prompt.

Now, let’s install the required dependencies:

    pip install streamlit replicate python-dotenv

3. Build the app

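- Define the app title

At the top of the script, import the libraries the app uses (import streamlit as st and import replicate). You can then set the title shown in the browser tab using the “page_title” parameter of st.set_page_config().

    st.set_page_config(page_title="🦙💬 Llava Chatbot")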

When developing the chatbot app, divide the sidebar elements as follows:

- Put the app title in the sidebar and create a dropdown select box. It has one option, ‘llava-13b’; you can add more models if you want.

- Use an if statement in the sidebar to handle the selected model: it checks whether the selected model is ‘llava-13b’ and, if it is, sets the variable llm to that model's version identifier on Replicate.

- Create a temperature slider to control the randomness of the output, with a minimum value of 0.01, a maximum value of 5.0, and a default value of 0.1.

- Create a top_p slider, with a minimum value of 0.01, a maximum value of 1.0, and a default value of 0.9.

- Create a max_length slider, which controls the maximum length of the generated text.

    with st.sidebar:
        st.title('🦙💬 Llava Chatbot')
        # Model selection: only one option for now
        selected_model = st.sidebar.selectbox('Choose a llava model', ['llava-13b'], key='selected_model')
        if selected_model == 'llava-13b':
            llm = 'yorickvp/llava-13b:2facb4a474a0462c15041b78b1ad70952ea46b5ec6ad29583c0b29dbd4249591'
        # Generation parameters
        temperature = st.sidebar.slider('temperature', min_value=0.01, max_value=5.0, value=0.1, step=0.01)
        top_p = st.sidebar.slider('top_p', min_value=0.01, max_value=1.0, value=0.9, step=0.01)
        max_length = st.sidebar.slider('max_length', min_value=64, max_value=4096, value=512, step=8)
        st.markdown('📖 Learn how to build a llava chatbot [blog](#link-to-blog)!')
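The select box is meant to grow as you add more models. One possible way to keep that manageable, sketched here, is to map each display name to its Replicate identifier in a dictionary instead of chaining if-else branches. The llava-13b identifier is the one used in this article; MODEL_VERSIONS and resolve_model are hypothetical names introduced for illustration.

```python
# Map each name shown in the select box to its Replicate model identifier.
# Only llava-13b comes from the article; add further entries as needed.
MODEL_VERSIONS = {
    "llava-13b": "yorickvp/llava-13b:2facb4a474a0462c15041b78b1ad70952ea46b5ec6ad29583c0b29dbd4249591",
}


def resolve_model(selected_model):
    """Return the Replicate identifier for a display name (hypothetical helper)."""
    try:
        return MODEL_VERSIONS[selected_model]
    except KeyError:
        raise ValueError(f"Unknown model: {selected_model!r}") from None


llm = resolve_model("llava-13b")
```

In the app, the options passed to st.sidebar.selectbox could then be list(MODEL_VERSIONS), and llm would be set with resolve_model(selected_model), so adding a model becomes a one-line change.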

4. Add file uploader for images

- When this line runs in a Streamlit app, it displays a file upload button that users can click to open their file browser and select an image to upload.

uploaded_file = st.file_uploader("Upload an image", type=["jpg", "png", "jpeg"])

5. Create the LLM response generation function

- The generate_llava_response() function is a custom function that asks the LLM (large language model) to respond to the user's prompt.

- It uses the history of the conversation to give the model context. It walks through the stored messages, flattens them into a single text dialogue, appends the new prompt, and sends the result to the model together with the uploaded image.

- The model reads the conversation history and produces a new message as a reply. This way, its response stays relevant to what was said earlier.

    def generate_llava_response(prompt_input):
        # Flatten the chat history into a single text dialogue
        string_dialogue = "You are a helpful assistant. You do not respond as 'User' or pretend to be 'User'. You only respond once as 'Assistant'."
        for dict_message in st.session_state.messages:
            if dict_message["role"] == "user":
                if dict_message.get("type") == "image":
                    string_dialogue += "User: [Image]\n\n"
                else:
                    string_dialogue += "User: " + dict_message["content"] + "\n\n"
            else:
                string_dialogue += "Assistant: " + dict_message["content"] + "\n\n"
        # Send the image and the dialogue to the model on Replicate.
        # Assumption: the sampling parameter names match the model's input schema.
        output = replicate.run(llm,
                               input={"image": uploaded_file,
                                      "prompt": f"{string_dialogue} {prompt_input} Assistant: ",
                                      "temperature": temperature, "top_p": top_p, "max_tokens": max_length})
        return output
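To see what text actually reaches the model, it can help to separate the history-flattening step from the API call. The sketch below does only that step in plain Python, so it runs without Streamlit or Replicate. build_dialogue is a hypothetical helper, messages stands in for st.session_state.messages, and, unlike the function above, it also puts a blank line after the system prompt.

```python
SYSTEM_PROMPT = (
    "You are a helpful assistant. You do not respond as 'User' or pretend "
    "to be 'User'. You only respond once as 'Assistant'."
)


def build_dialogue(messages, system_prompt=SYSTEM_PROMPT):
    """Flatten a list of chat messages into one text prompt (hypothetical helper)."""
    parts = [system_prompt]
    for message in messages:
        if message["role"] == "user":
            if message.get("type") == "image":
                # Images cannot be inlined as text, so use a placeholder.
                parts.append("User: [Image]")
            else:
                parts.append("User: " + message["content"])
        else:
            parts.append("Assistant: " + message["content"])
    # Separate turns with a blank line and end with one as well.
    return "\n\n".join(parts) + "\n\n"


history = [
    {"role": "assistant", "content": "How may I assist you today?"},
    {"role": "user", "content": b"<image bytes>", "type": "image"},
    {"role": "user", "content": "What is this image about?"},
]
print(build_dialogue(history))
```

Keeping this step as a pure function makes it easy to print or unit-test the prompt before spending API calls on it.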

6. Add, display, and clear chat messages

The code stores the chat messages in Streamlit's session state under the key messages, so they persist across reruns of the script.

It displays the chat messages one by one on the screen using Streamlit’s st.chat_message() function.

The code also adds a button labelled “Clear Chat History” in the sidebar.

When the button is clicked, the chat history is cleared.

    # Ensure session state for messages if it doesn't exist
    if "messages" not in st.session_state:
        st.session_state.messages = [{"role": "assistant", "content": "How may I assist you today?"}]

    # Display or clear chat messages
    for message in st.session_state.messages:
        with st.chat_message(message["role"]):
            if message.get("type") == "image":
                st.image(message["content"])
            else:
                st.write(message["content"])

    # Sidebar to clear chat history
    def clear_chat_history():
        st.session_state.messages = [{"role": "assistant", "content": "How may I assist you today?"}]

    st.sidebar.button('Clear Chat History', on_click=clear_chat_history)

7. Accept prompt input

- A text input box lets the user type a message, and a Send button submits it.

- When Send is clicked, the uploaded image (if any) and the typed message are added to the list of previous messages in the chat session. This way, the conversation history keeps growing with each message the user sends.

    # Text input for chat
    prompt = st.text_input("Type a message:")

    # Button to send the message/image
    if st.button('Send'):
        if uploaded_file:
            # If an image is uploaded, store it in session_state
            st.session_state.messages.append({"role": "user", "content": uploaded_file, "type": "image"})
        if prompt:
            st.session_state.messages.append({"role": "user", "content": prompt})

8. Generate a new LLM response

- If the last message in the chat wasn’t from the assistant, the assistant generates a new response.

- While the response is being generated, the app shows a spinner, then displays the message incrementally as if it were being “typed” out. After the message is fully generated, it is added to the conversation history.
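This step is described without code, so here is a minimal sketch of its logic in plain Python. It assumes the model output arrives as an iterable of text chunks. needs_reply and render_incrementally are hypothetical helpers, and update stands in for whatever redraws the message on screen.

```python
def needs_reply(messages):
    """Return True when the last message is not from the assistant."""
    return bool(messages) and messages[-1]["role"] != "assistant"


def render_incrementally(chunks, update):
    """Accumulate streamed text chunks, redrawing the partial text after each one."""
    full_response = ""
    for chunk in chunks:
        full_response += chunk
        update(full_response)  # show everything received so far
    return full_response


messages = [
    {"role": "assistant", "content": "How may I assist you today?"},
    {"role": "user", "content": "What is this image about?"},
]

if needs_reply(messages):
    fake_stream = ["A llama ", "standing ", "in a field."]  # stand-in for model output
    shown = []  # records each redraw, in place of a real UI element
    reply = render_incrementally(fake_stream, shown.append)
    # Store the finished reply so it becomes part of the history.
    messages.append({"role": "assistant", "content": reply})
```

In the Streamlit app, this would typically sit inside with st.chat_message("assistant"): and with st.spinner("Thinking..."):, with placeholder = st.empty() created first, placeholder.markdown passed as update, and the result of generate_llava_response(prompt) passed as chunks. The finished reply would then be appended to st.session_state.messages rather than to a local list.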

Wrapping up

Congratulations! That is all it takes to make a LLaVA chatbot using Streamlit and Replicate.

Where you take it next, including how you improve the chatbot's performance, depends on your imagination and creativity.

Drop a comment to share what you are working on🔥👇

I hope you found this tutorial helpful. Stay tuned to this series, because there is a lot more to come.

I can’t wait to see what you will build based on what you have learned here.

Thanks for reading, and keep spreading your love, Cheers!

The code can be found on my GitHub.
