Navigating the world of prompt engineering can be challenging, especially for those seeking to unlock the full potential of AI language models such as GPT-4.

This craft involves creating instructions that are clear, unbiased, and specific enough to guide AI towards better answers.

It’s not just about avoiding confusion or misinterpretation, but also about ensuring AI doesn’t respond with biased, offensive, or overly wordy content.

For those who master prompt engineering, for example with LangChain, it opens up a world of possibilities for more effective, ethical interactions with AI.

In this easy-to-follow guide, we are going to see a complete example of how to use Step Back Prompting to improve performance.

I am thrilled to try out LangChain’s support for this technique, especially as someone passionate about prompt engineering.

What is Step Back Prompting?

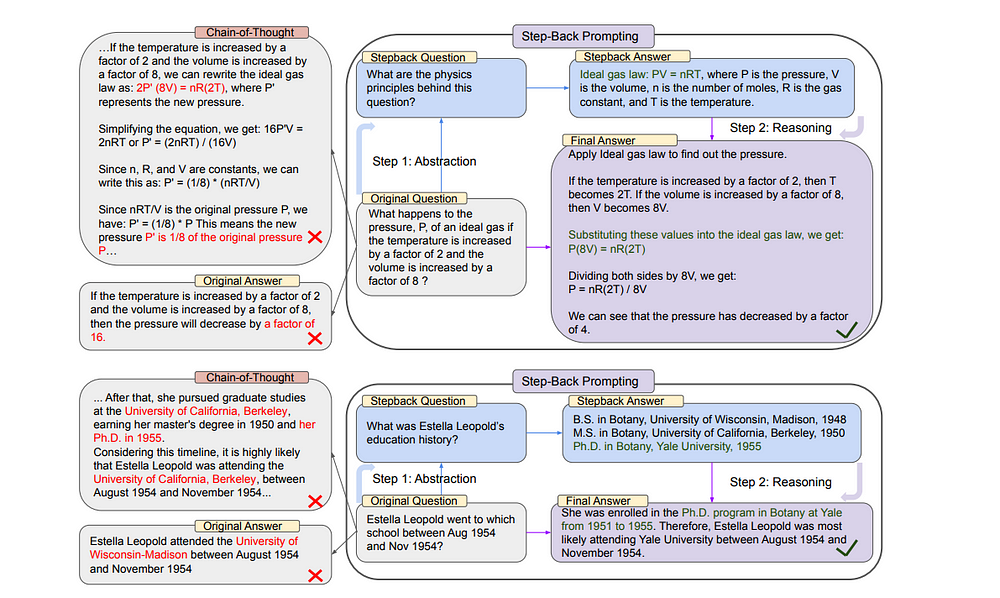

STEP-BACK PROMPTING is a simple prompting technique that enables large language models (LLMs) to derive high-level concepts and first principles from specific instances, significantly improving their abilities to follow a correct reasoning path towards the solution.

It is motivated by the observation that many tasks contain so much detail that it is hard for LLMs to retrieve the relevant facts to tackle the task.

Put simply, Step-Back Prompting can improve performance on complex questions by first asking a “step back” question.

This can be combined with regular question-answering applications by then doing retrieval on both the original and step-back questions.

What does step-back prompting do to solve problems?

STEP-BACK PROMPTING solves the problem of reasoning failures in the intermediate steps of complex tasks such as knowledge-intensive QA, multi-hop reasoning, and science questions.

These tasks are known to be challenging due to the amount of detail involved in reasoning through them.

LLMs such as PaLM-2L can struggle to retrieve relevant facts to tackle the task and, when reasoning directly on the question, can deviate from the underlying first principle (for example, the Ideal Gas Law in a physics question).

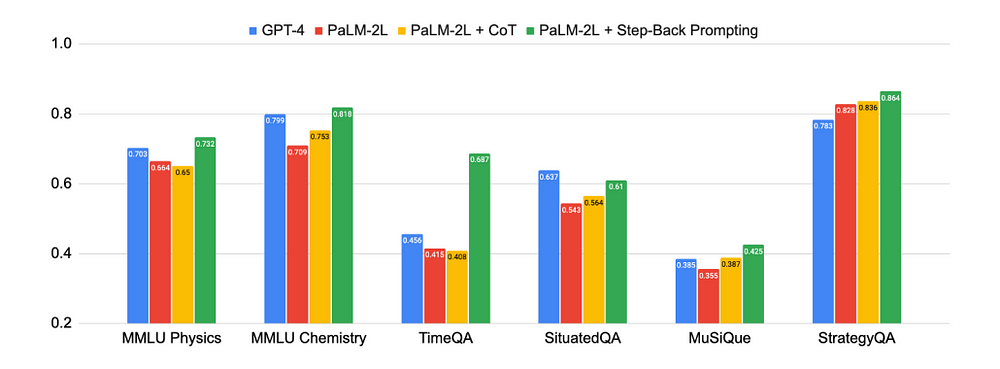

This chart shows the strong performance of Step-Back Prompting.

How does STEP-BACK PROMPTING work?

STEP-BACK PROMPTING works by prompting the LLM to take a step back from the specific instance and reason about the general concept or principle behind it.

STEP-BACK PROMPTING leads to substantial performance gains on a wide range of challenging reasoning-intensive tasks.

Specifically, the authors of the technique report that PaLM-2L models achieve performance improvements of up to 11% on MMLU Physics and Chemistry, 27% on TimeQA, and 7% on MuSiQue with Step-Back Prompting.

The algorithm in brief:

- Generate a step-back question from the user’s original question

- Retrieve information related to both the original question and the step-back question

- Formulate a response that draws on the information gathered for both questions
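The three steps above can be sketched in plain Python before bringing in any library. This is only an illustration: step_back_answer and the three fake_* helpers are hypothetical stand-ins for the LLM call, the web search, and the final answering step, not part of LangChain.

```python
from typing import Callable


def step_back_answer(
    question: str,
    make_step_back: Callable[[str], str],
    retrieve: Callable[[str], str],
    answer: Callable[[str, str, str], str],
) -> str:
    """Run the three steps: abstract, retrieve for both questions, answer."""
    step_back_question = make_step_back(question)      # step 1
    normal_context = retrieve(question)                # step 2 (original question)
    step_back_context = retrieve(step_back_question)   # step 2 (step-back question)
    return answer(question, normal_context, step_back_context)  # step 3


# Toy stand-ins so the sketch runs without an LLM or a search engine.
def fake_step_back(question: str) -> str:
    return "when was ChatGPT developed?"


def fake_retrieve(query: str) -> str:
    return f"<results for: {query}>"


def fake_answer(question: str, normal: str, step_back: str) -> str:
    return f"Q: {question}\n{normal}\n{step_back}"


result = step_back_answer(
    "was chatgpt around while trump was president?",
    fake_step_back,
    fake_retrieve,
    fake_answer,
)
print(result)
```

The rest of this guide replaces each stand-in with a real component: a chat model for the step-back question, a search wrapper for retrieval, and a second chat model call for the answer.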

Now let’s get practical!

1. Install Necessary Packages and Import Dependencies:

Begin by installing the required Python packages using ‘pip’ and importing the necessary dependencies.

# Required Python packages
!pip install langchain
!pip install openai
!pip install duckduckgo-search
!pip install langchainhub

# Import the necessary dependencies
from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate, FewShotChatMessagePromptTemplate
from langchain.schema.output_parser import StrOutputParser
from langchain.schema.runnable import RunnableLambda
from langchain.utilities import DuckDuckGoSearchAPIWrapper
from langchain import hub
import os
import openai

Finally, we’ll need to set an environment variable for the OpenAI API key:

# Replace the placeholder with your own key (the value must be a string)
os.environ["OPENAI_API_KEY"] = "<YOUR_API_KEY>"

Let’s walk through the code that follows.

examples: a list of dictionaries, each holding an input question and its step-back version, to be included in the final prompt.

example_prompt: It transforms each example by generating one or more messages using its format_messages method. Typically, this involves converting an example into a human message and an AI message response, or a human message followed by a function call message.

Let’s set up a few_shot_prompt that formats the examples for the final prompt given to the model. The example list here is fixed rather than selected per input. It works by defining a conversation template that consists of both user input and expected model output.

# Few Shot Examples
examples = [
    {
        "input": "Could the members of The Police perform lawful arrests?",
        "output": "what can the members of The Police do?"
    },
    {
        "input": "Jan Sindel’s was born in what country?",
        "output": "what is Jan Sindel’s personal history?"
    },
]

# We now transform these to example messages
example_prompt = ChatPromptTemplate.from_messages(
    [
        ("human", "{input}"),
        ("ai", "{output}"),
    ]
)

few_shot_prompt = FewShotChatMessagePromptTemplate(
    example_prompt=example_prompt,
    examples=examples,
)
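To make the effect of the few-shot template concrete, here is a plain-Python sketch of the message list it conceptually produces. It does not call LangChain; expand_examples and build_messages are hypothetical helpers, and the system text is a placeholder rather than the exact instruction used in this guide.

```python
examples = [
    {
        "input": "Could the members of The Police perform lawful arrests?",
        "output": "what can the members of The Police do?",
    },
    {
        "input": "Jan Sindel’s was born in what country?",
        "output": "what is Jan Sindel’s personal history?",
    },
]


def expand_examples(examples):
    """Turn each example dict into a human message followed by an AI message."""
    messages = []
    for example in examples:
        messages.append(("human", example["input"]))
        messages.append(("ai", example["output"]))
    return messages


def build_messages(system_text, examples, question):
    """Assemble system instruction, few-shot pairs, and the new question."""
    return [("system", system_text), *expand_examples(examples), ("human", question)]


messages = build_messages(
    "Rephrase the question as a more generic step-back question.",  # placeholder
    examples,
    "was chatgpt around while trump was president?",
)
for role, content in messages:
    print(f"{role}: {content}")
```

Each example contributes one human/AI pair, so the model sees two worked rephrasings before it receives the new question.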

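Next, we prepare the chain that generates the step-back question. The chain relies on a prompt object that this walkthrough does not define; the definition below is an assumption that combines a system instruction, the few-shot examples, and the user’s question.

# Assumed definition: prompt is not shown in the original listing
prompt = ChatPromptTemplate.from_messages([
    ("system", "You are an expert at world knowledge. Your task is to step back and paraphrase a question to a more generic step-back question, which is easier to answer. Here are a few examples:"),
    few_shot_prompt,
    ("user", "{question}"),
])

question_gen = prompt | ChatOpenAI(temperature=0) | StrOutputParser()
question = "was chatgpt around while trump was president?"
question_gen.invoke({"question": question})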

The question asked was: “was chatgpt around while trump was president?”

The generated step-back question is: “when was ChatGPT developed?”

from langchain.utilities import DuckDuckGoSearchAPIWrapper

# Return up to four web search results per query
search = DuckDuckGoSearchAPIWrapper(max_results=4)

def retriever(query):
    return search.run(query)

This prepares a retriever that collects information from web searches.

retriever(question)

Executing the retriever with the original question gives the following output:

'This includes content about former President Donald Trump.

According to further tests, ChatGPT successfully wrote poems admiring all

recent U.S. presidents, but failed when we entered a query for ... On

Wednesday, a Twitter user posted screenshots of him asking OpenAI\'s chatbot,

ChatGPT, to write a positive poem about former President Donald Trump, to

which the chatbot declined, citing it ... While impressive in many respects,

ChatGPT also has some major flaws. ... [President\'s Name]," refused to

write a poem about ex-President Trump, but wrote one about President Biden ...

During the Trump administration, Altman gained new attention as a vocal critic

of the president. It was against that backdrop that he was rumored to be

considering a run for California governor.'

Now execute the retriever again, this time with the generated step-back question:

retriever(question_gen.invoke({"question": question}))

Will Douglas Heaven March 3, 2023 Stephanie Arnett/MITTR | Envato When OpenAI

launched ChatGPT, with zero fanfare, in late November 2022,

the San Francisco-based artificial-intelligence company... ChatGPT,

which stands for Chat Generative Pre-trained Transformer, is a large language

model -based chatbot developed by OpenAI and launched on November 30, 2022,

which enables users to refine and steer a conversation towards a desired

length, format, style, level of detail, and language. ChatGPT is an artificial

intelligence (AI) chatbot built on top of OpenAI's foundational large language

models (LLMs) like GPT-4 and its predecessors. This chatbot has redefined the

standards of... June 4, 2023 ⋅ 4 min read 124 SHARES 13K At the end of 2022,

OpenAI introduced the world to ChatGPT. Since its launch, ChatGPT hasn't shown

significant signs of slowing down in developing new..

Let’s pull the step-back answer prompt template from LangChain Hub:

from langchain import hub

response_prompt = hub.pull("langchain-ai/stepback-answer")

The contents of this prompt template are as follows:

# response_prompt_template = """You are an expert of world knowledge. I am going to ask you a question. Your response should be comprehensive and not contradicted with the following context if they are relevant. Otherwise, ignore them if they are not relevant.

# {normal_context}

# {step_back_context}

# Original Question: {question}

# Answer:"""

# response_prompt = ChatPromptTemplate.from_template(response_prompt_template)
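To see what the model eventually receives, the template can be filled by hand. The sketch below uses plain str.format as a stand-in for the prompt template class, and the two context strings are placeholders rather than real search results.

```python
response_prompt_template = """You are an expert of world knowledge. I am going to ask you a question. Your response should be comprehensive and not contradicted with the following context if they are relevant. Otherwise, ignore them if they are not relevant.

{normal_context}
{step_back_context}

Original Question: {question}
Answer:"""

# Placeholder contexts; in the real chain these come from the retriever.
filled = response_prompt_template.format(
    normal_context="[search results for the original question]",
    step_back_context="[search results for the step-back question]",
    question="was chatgpt around while trump was president?",
)
print(filled)
```

The template has exactly three variables, which is why the chain that feeds it must supply normal_context, step_back_context, and question.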

We are going to create a chain that uses the “stepback-answer” prompt template and supplies the regular context, the step-back context, and the question as inputs.

A RunnableLambda wraps a Python callable so that it can be executed either synchronously or asynchronously and composed with other steps.
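The idea behind such a wrapper can be illustrated with a few lines of plain Python. TinyRunnable below is a hypothetical stand-in written for this guide, not LangChain’s implementation; it only shows the pattern of one callable exposed through a synchronous entry point, an asynchronous one, and the | operator.

```python
import asyncio


class TinyRunnable:
    """Hypothetical stand-in: wraps a callable with sync and async entry points."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    async def ainvoke(self, value):
        # Run the sync callable in a worker thread so the event loop is not blocked.
        return await asyncio.to_thread(self.func, value)

    def __or__(self, other):
        # a | b builds a new runnable that feeds a's output into b.
        nxt = other if isinstance(other, TinyRunnable) else TinyRunnable(other)
        return TinyRunnable(lambda value: nxt.invoke(self.invoke(value)))


get_question = TinyRunnable(lambda x: x["question"])
shout = get_question | str.upper  # a plain callable is wrapped automatically

payload = {"question": "when was ChatGPT developed?"}
sync_result = shout.invoke(payload)
async_result = asyncio.run(shout.ainvoke(payload))
print(sync_result)
```

This is the same shape as the pipe between the question lookup and the retriever function in the chain: the left side is wrapped explicitly, and the plain function on the right is accepted as a step.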

chain = {
    # Retrieve context using the normal question
    "normal_context": RunnableLambda(lambda x: x['question']) | retriever,
    # Retrieve context using the step-back question
    "step_back_context": question_gen | retriever,
    # Pass on the question
    "question": lambda x: x["question"]
} | response_prompt | ChatOpenAI(temperature=0) | StrOutputParser()

This prepares a chain that uses the “stepback-answer” prompt template.
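The dictionary at the head of the chain sends the same input to every branch and collects the results under the matching keys. A rough plain-Python sketch of that behaviour follows; run_parallel_map, fake_retriever, and fake_question_gen are hypothetical names used only for illustration.

```python
def run_parallel_map(steps, value):
    """Call every step on the same input and collect the results by key."""
    return {key: step(value) for key, step in steps.items()}


# Toy stand-ins for the web search and the step-back question generator.
def fake_retriever(query):
    return f"[results for: {query}]"


def fake_question_gen(inputs):
    return "when was ChatGPT developed?"


steps = {
    "normal_context": lambda x: fake_retriever(x["question"]),
    "step_back_context": lambda x: fake_retriever(fake_question_gen(x)),
    "question": lambda x: x["question"],
}

prompt_inputs = run_parallel_map(
    steps, {"question": "was chatgpt around while trump was president?"}
)
for key, value in prompt_inputs.items():
    print(f"{key}: {value}")
```

The resulting dictionary has the three keys that the answer template expects, so it can be passed straight to the prompt step.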

chain.invoke({"question": question})

Here is the response, which is correct:

No, ChatGPT was not around while Donald Trump was president.

ChatGPT was launched on November 30, 2022, which is after Donald Trump's

presidency.

What if we don’t use step-back prompting?

Let’s check the answer to the same question without stepping back.

First, we prepare a prompt template that contains only the regular context:

response_prompt_template = """You are an expert of world knowledge. I am going to ask you a question. Your response should be comprehensive and not contradicted with the following context if they are relevant. Otherwise, ignore them if they are not relevant.

{normal_context}

Original Question: {question}

Answer:"""

response_prompt = ChatPromptTemplate.from_template(response_prompt_template)

Next, we prepare the chain:

chain = {
    # Retrieve context using the normal question
    "normal_context": RunnableLambda(lambda x: x['question']) | retriever,
    # Pass on the question
    "question": lambda x: x["question"]
} | response_prompt | ChatOpenAI(temperature=0) | StrOutputParser()

Let’s execute the chain:

chain.invoke({"question": question})

Here is the response, which is incorrect:

Yes, ChatGPT was around while Donald Trump was president. However,

it is important to note that the specific context you provided mentions

that ChatGPT refused to write a positive poem about former President Donald

Trump

Conclusion:

Through this tutorial, we have learned how to use step-back prompting to improve a model’s answers to complex questions. In the example above, retrieving context for the step-back question as well gave the model the launch date it needed, while the chain without it answered incorrectly.

All in all, I am pretty impressed with step-back prompting. It’s a very cool tool to have in your tool belt, and it is only going to get better as time goes on.
