In this post, I will show you step by step how to build your own AI chatbot and, on top of that, how to reduce token costs and decrease API response latency.

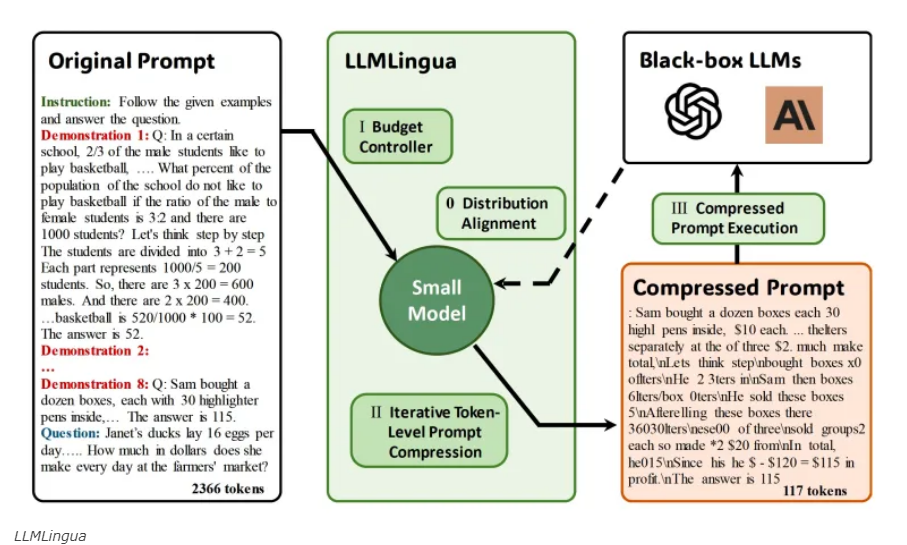

On December 7th, Microsoft researchers announced “LLMLingua,” a new technique that heavily compresses the prompts given to large language models (LLMs).

LLMLingua allows you to significantly shorten long prompts while retaining their meaning.

In this post, we will cover what LLMLingua is, its key features and functionality, and how to use LLMLingua with LlamaIndex and RAG to build a cost-effective chatbot.

What is LLMLingua?

LLMLingua is a prompt compression method designed for large language models (LLMs). It is a coarse-to-fine algorithm with three parts: a budget controller that maintains semantic integrity under high compression ratios, a token-level iterative compression algorithm that better models the interdependence between compressed contents, and an instruction-tuning-based method that aligns the distribution of the small compressor model with that of the target LLM.

Key Features:

The features of LLMLingua include:

1. Coarse-to-fine prompt compression: LLMLingua offers a method for compressing prompts in a coarse-to-fine manner, allowing for both demonstration-level compression to maintain semantic integrity under high compression ratios and token-level iterative compression to better model the interdependence between compressed contents.

2. Budget controller: LLMLingua includes a budget controller to dynamically allocate different compression ratios to various components in the original prompts, such as the instruction, demonstrations, and questions.

3. Alignment method: LLMLingua provides an instruction tuning-based method for distribution alignment between language models, addressing the challenge of distribution discrepancy between the target LLM and the small language model used for prompt compression.

4. State-of-the-art performance: LLMLingua has been shown to achieve state-of-the-art performance across multiple datasets, demonstrating its effectiveness in prompt compression while maintaining semantic integrity and allowing for up to 20x compression ratio with minimal performance loss.

5. Practical implications: LLMLingua not only reduces computational costs but also offers a potential solution for accommodating longer contexts in large language models, contributing to improved inference efficiency and reduced text length in generated outputs.
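To make the budget controller more concrete, here is a minimal sketch of how a token budget might be split across the three prompt components. It follows the idea described above (compress the instruction and question lightly, and let the demonstrations absorb most of the compression), but the function name, the default ratios, and the token counts are illustrative assumptions, not LLMLingua's actual code.

```python
def allocate_budget(n_instruction, n_demos, n_question,
                    target_ratio, instruction_ratio=0.85, question_ratio=0.9):
    """Split an overall token budget across prompt components.

    Every ratio means "fraction of tokens kept". The defaults are hypothetical.
    """
    total = n_instruction + n_demos + n_question
    budget = target_ratio * total
    kept_instruction = instruction_ratio * n_instruction
    kept_question = question_ratio * n_question
    # Whatever is left after the instruction and question goes to the demonstrations.
    kept_demos = max(budget - kept_instruction - kept_question, 0.0)
    return {
        "instruction": kept_instruction,
        "demonstrations": kept_demos,
        "question": kept_question,
        "demo_ratio": kept_demos / n_demos if n_demos else 0.0,
    }


# Hypothetical prompt: short instruction and question, long demonstrations.
plan = allocate_budget(n_instruction=50, n_demos=2000, n_question=30, target_ratio=0.1)
print(plan)
```

With these made-up numbers the demonstrations end up far more heavily compressed than the instruction or the question, which is the behaviour the budget controller is meant to produce.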


How does LLMLingua reduce cost and decrease API response latency?

LLMLingua lowers cost and latency by shrinking the prompt before it reaches the target LLM. Hosted LLM APIs typically bill per token, so fewer input tokens mean a smaller bill, and a shorter prompt gives the model less text to process before it starts generating, which shortens response time. Because the compression is designed to preserve the prompt's meaning, these savings come with little loss in answer quality.

Without further delay, let’s jump into the practical implementation with LLMLingua and LlamaIndex.
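As a rough illustration of the cost side, the arithmetic below estimates the input-token bill before and after compression. The token counts are the ones measured later in this post; the price per 1,000 tokens is a hypothetical placeholder, so substitute your provider's actual rate.

```python
PRICE_PER_1K_INPUT_TOKENS = 0.001  # hypothetical rate in USD, not a real price quote


def input_cost(num_tokens, price_per_1k=PRICE_PER_1K_INPUT_TOKENS):
    """Return the cost in USD of sending num_tokens input tokens."""
    return num_tokens / 1000 * price_per_1k


original_tokens = 10719   # retrieved context before compression
compressed_tokens = 308   # retrieved context after compression

original_cost = input_cost(original_tokens)
compressed_cost = input_cost(compressed_tokens)
savings = 1 - compressed_cost / original_cost

print(f"Original:   ${original_cost:.6f} per request")
print(f"Compressed: ${compressed_cost:.6f} per request")
print(f"Input-token savings: {savings:.1%}")
```

This covers the input side only. Output tokens are billed separately, and running the small compressor model has its own compute cost that this estimate ignores.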

Let’s start by installing the requirements. Assuming you have created a new Python project and set up a virtual environment, run the command:

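# Install the dependencies. The imports in this post use the pre-0.10 llama_index package layout, hence the version pin.

!pip install llmlingua "llama-index<0.10"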

Let’s import the required dependencies and set your OpenAI API key.

import openai

openai.api_key = "<insert_openai_key>"

Next, let’s use wget to download the Paul Graham essay that will serve as our sample document.

!wget "https://www.dropbox.com/s/f6bmb19xdg0xedm/paul_graham_essay.txt?dl=1" -O paul_graham_essay.txt

We are going to import VectorStoreIndex, SimpleDirectoryReader, load_index_from_storage, and StorageContext from llama_index.

SimpleDirectoryReader reads documents from disk. Here it is initialized with input_files=["paul_graham_essay.txt"], so it reads only the file named paul_graham_essay.txt. Calling load_data() on the instance loads the content of the file into the documents variable.

from llama_index import (
    VectorStoreIndex,
    SimpleDirectoryReader,
    load_index_from_storage,
    StorageContext,
)

documents = SimpleDirectoryReader(
    input_files=["paul_graham_essay.txt"]
).load_data()

Next, we build a VectorStoreIndex with the from_documents method. It takes the documents loaded in the previous step, splits them into chunks (nodes), embeds them, and creates an index.

Then we call as_retriever on the index to set up a retriever. The similarity_top_k=10 parameter means the retriever returns the 10 chunks most similar to each query.

index = VectorStoreIndex.from_documents(documents)

retriever = index.as_retriever(similarity_top_k=10)

# question = "What did the author do growing up?"

# question = "What did the author do during his time in YC?"

question = "Where did the author go for art school?"

Here is the ground-truth answer we expect:

# Ground-truth Answer

answer = "RISD"

Here the question is used to retrieve relevant chunks from the index. The retriever finds the chunks most similar to the question, we extract their text into a list, and we check how many chunks came back.

contexts = retriever.retrieve(question)

context_list = [n.get_content() for n in contexts]

len(context_list)
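Conceptually, top-k retrieval ranks every chunk by how similar its embedding is to the question's embedding and keeps the best k. The sketch below shows that idea with tiny hand-made vectors and cosine similarity in plain Python. The vectors and helper names are made up for illustration; the real index uses embeddings from an embedding model and its own storage.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, chunk_vecs, k):
    """Return the ids of the k chunks most similar to the query, best first."""
    ranked = sorted(
        chunk_vecs,
        key=lambda chunk_id: cosine_similarity(query_vec, chunk_vecs[chunk_id]),
        reverse=True,
    )
    return ranked[:k]


# Toy 3-dimensional "embeddings" (hypothetical values).
toy_chunks = {
    "art_school": [0.9, 0.1, 0.0],
    "startup": [0.1, 0.9, 0.2],
    "childhood": [0.2, 0.2, 0.9],
}
toy_query = [1.0, 0.2, 0.1]

print(top_k(toy_query, toy_chunks, k=2))
```

A larger k gives the LLM more material to answer from, but it also makes the prompt longer, which is exactly the cost that compression is meant to offset.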

We import OpenAI from llama_index.llms to generate a response to the question, informed by the retrieved chunks. The chunks are joined with the question to form a prompt, which is then sent to the language model. This is the uncompressed baseline.

# The response from original prompt

from llama_index.llms import OpenAI

llm = OpenAI(model="gpt-3.5-turbo-16k")

prompt = "\n\n".join(context_list + [question])

response = llm.complete(prompt)

print(str(response))

Here is what we get:

The author went to the Rhode Island School of Design (RISD) for art school.

Now we are going to set up a LongLLMLinguaPostprocessor, which compresses the retrieved nodes before they are sent to the language model. We specify the instruction, the target number of tokens for the compressed context (target_token), the ranking method, and additional parameters that control how the context is compressed and reordered.

from llama_index.query_engine import RetrieverQueryEngine

from llama_index.response_synthesizers import CompactAndRefine

from llama_index.indices.postprocessor import LongLLMLinguaPostprocessor

node_postprocessor = LongLLMLinguaPostprocessor(
    instruction_str="Given the context, please answer the final question",
    target_token=300,
    rank_method="longllmlingua",
    additional_compress_kwargs={
        "condition_compare": True,
        "condition_in_question": "after",
        "context_budget": "+100",
        "reorder_context": "sort",  # enable document reorder
        "dynamic_context_compression_ratio": 0.3,
    },
)
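As I understand them, the context_budget and reorder_context options affect the coarse, document-level stage. As a rough mental model (an assumption on my part, not the library's actual code), you can think of it as ranking the retrieved chunks by relevance, putting the most relevant first, and keeping chunks until a token budget is used up. In the sketch below the relevance scores, token counts, and function name are all hypothetical, and the budget of 400 simply mirrors target_token=300 with "+100" added.

```python
def select_contexts(chunks, budget):
    """Keep the most relevant chunks that fit within a token budget.

    chunks: list of (text, relevance_score, token_count) tuples.
    Returns the kept texts, most relevant first.
    """
    ranked = sorted(chunks, key=lambda c: c[1], reverse=True)
    kept, used = [], 0
    for text, _score, tokens in ranked:
        if used + tokens > budget:
            continue  # skip chunks that would exceed the budget
        kept.append(text)
        used += tokens
    return kept


# Hypothetical retrieved chunks: (text, relevance score, token count).
scored_chunks = [
    ("chunk about YC", 0.20, 250),
    ("chunk about RISD", 0.95, 180),
    ("chunk about Interleaf", 0.60, 150),
    ("chunk about painting", 0.40, 120),
]

selected = select_contexts(scored_chunks, budget=300 + 100)
print(selected)
```

The fine-grained, token-level compression then runs on whatever survives this stage.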

We use the retriever to fetch the nodes relevant to the question.

CompactAndRefine combines text chunks into larger consolidated chunks that make fuller use of the available context window, then refines the answer across those chunks.

retrieved_nodes = retriever.retrieve(question)

synthesizer = CompactAndRefine()
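To picture the "compact" half of CompactAndRefine, the sketch below greedily packs small chunks into as few larger chunks as fit a size limit, so that each LLM call uses more of the context window. This is a simplified illustration: it counts whitespace-separated words instead of real tokens, and the function name and limit are assumptions rather than LlamaIndex's implementation.

```python
def compact_chunks(chunks, max_words):
    """Greedily merge consecutive chunks without exceeding max_words per merged chunk."""
    packed, current, current_len = [], [], 0
    for chunk in chunks:
        n_words = len(chunk.split())
        # Start a new packed chunk when adding this one would overflow the limit.
        if current and current_len + n_words > max_words:
            packed.append("\n\n".join(current))
            current, current_len = [], 0
        current.append(chunk)
        current_len += n_words
    if current:
        packed.append("\n\n".join(current))
    return packed


small_chunks = ["a b c", "d e", "f g h i", "j"]
packed = compact_chunks(small_chunks, max_words=5)
print(len(packed), packed)
```

The "refine" half then walks through the packed chunks in order, asking the LLM to improve its answer with each one.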

Let’s import QueryBundle from llama_index to wrap the query. We pass the retrieved nodes through the postprocessor to compress them, then compare the token counts of the original and compressed contexts to see how much shorter the prompt has become.

from llama_index.indices.query.schema import QueryBundle

# outline steps in RetrieverQueryEngine for clarity:
# postprocess (compress), synthesize
new_retrieved_nodes = node_postprocessor.postprocess_nodes(
    retrieved_nodes, query_bundle=QueryBundle(query_str=question)
)

original_contexts = "\n\n".join([n.get_content() for n in retrieved_nodes])
compressed_contexts = "\n\n".join([n.get_content() for n in new_retrieved_nodes])

original_tokens = node_postprocessor._llm_lingua.get_token_length(original_contexts)
compressed_tokens = node_postprocessor._llm_lingua.get_token_length(compressed_contexts)

Let’s print it out.

print(compressed_contexts)

print()

print("Original Tokens:", original_tokens)

print("Compressed Tokens:", compressed_tokens)

print("Compressed Ratio:", f"{original_tokens/(compressed_tokens + 1e-5):.2f}x")

As you can see in the output below, the postprocessor compressed the retrieved context into a much shorter version that keeps the key elements while greatly reducing its length. The compressed text is not meant to read well to a human; what matters is that the LLM can still answer from it.

next Rtm's advice hadn' included anything that. I wanted to do something completely different, so I decided I'd paint. I wanted to how good I could get if I focused on it. the day after stopped on YC, I painting. I was rusty and it took a while to get back into shape, but it was at least completely engaging.1]

I wanted to back RISD, was now broke and RISD was very expensive so decided job for a year and return RISD the fall. I got one at Interleaf, which made software for creating documents. You like Microsoft Word? Exactly That was I low end software tends to high. Interleaf still had a few years to live yet. []

the Accademia wasn't, and my money was running out, end year back to the

lot the color class I tookD, but otherwise I was basically myself to do that for in993 I dropped I aroundidence bit then my friend Par did me a big A rent-partment building New York. Did I want it Itt more my place, and York be where the artists. wanted [For when you that ofs you big painting of this type hanging in the apartment of a hedge fund manager, you know he paid millions of dollars for it. That's not always why artists have a signature style, but it's usually why buyers pay a lot for such work. [6]

Original Tokens: 10719

Compressed Tokens: 308

Compressed Ratio: 34.80x

Finally, let’s pass the question and the compressed nodes to the synthesizer, which generates a direct answer to the user’s query from the compressed context.

response = synthesizer.synthesize(question, new_retrieved_nodes)

print(str(response))

Here is the result:

The author went to RISD for art school.
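A quick way to sanity-check both runs is to test whether the ground-truth answer appears in each response. The helper below is a hypothetical convenience, not part of LlamaIndex, and a substring match is only a crude check; the two strings are the outputs shown in this post.

```python
def contains_answer(response_text, expected):
    """Case-insensitive check that the expected answer appears in the response."""
    return expected.lower() in response_text.lower()


ground_truth = "RISD"
baseline_response = "The author went to the Rhode Island School of Design (RISD) for art school."
compressed_response = "The author went to RISD for art school."

print(contains_answer(baseline_response, ground_truth))
print(contains_answer(compressed_response, ground_truth))
```

Both checks pass here, so for this question the compressed prompt gave the same answer as the full one.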


Conclusion:

The integration of LLMLingua with LlamaIndex is a practical step forward for applications built on large language models (LLMs).

It brings on-the-fly prompt compression into the retrieval pipeline and improves inference efficiency, both of which matter when building context-aware, efficient LLM applications.

The integration doesn’t just speed up inference; it also aims to preserve the meaning of the compressed context. In our example, the retrieved context shrank from 10,719 tokens to 308, and the model still answered the question correctly.

We reduce the length of the input while retaining the vital context, which lowers cost and latency without sacrificing the answer.
