Zephyr 7B beta is the second model in the series and is a fine-tuned version of mistralai/Mistral-7B-v0.1 that was trained on a mix of publicly available synthetic datasets.

As language models grow, their capabilities change in unexpected ways. One of the latest innovations in LLMs is zephyr-7b-beta.

It has about 7 billion parameters, is small enough to run on a laptop, and can answer user questions and generate text.

On my homepage, I am always researching LLMs, and I am very excited about zephyr-7b-beta.

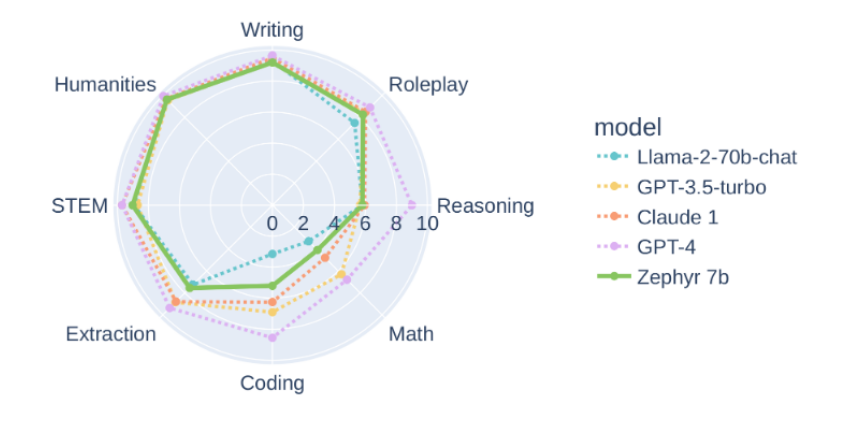

Furthermore, in terms of writing and roleplay, it has achieved accuracy approaching that of GPT-4.

Sounds interesting, right? In this follow-along tutorial, I will show you how to use zephyr-7b-beta, share my impressions after actually using it, and finally compare it with GPT-4.

I highly recommend reading this article to the end: zephyr-7b-beta can be a game changer for your chatbot, and by the end you will appreciate its power!

What is Zephyr 7b beta?

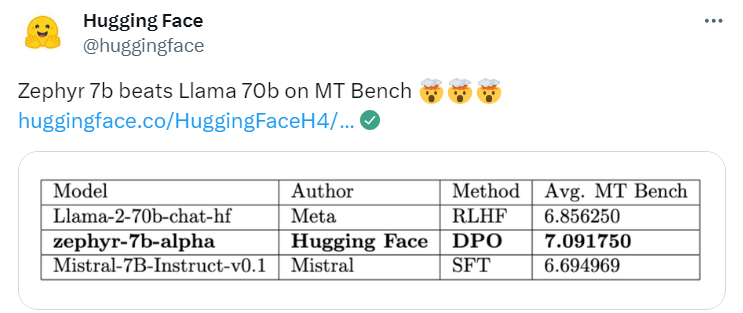

“Zephyr 7B beta” is a fine-tuned version of the “Mistral” model, developed by the Hugging Face H4 team. It performs similarly to the much larger Llama 2 Chat 70B model from Meta in multiple benchmark tests and achieves even better results on MT-Bench.

Zephyr is a series of language models that are trained to act as helpful assistants.

The core strength of this model lies in its capacity to draw on a wide range of web data and technical sources.

Since it is still a beta version, it is an LLM that I would like to keep an eye on for further improvements and advances in the future.

Check the model performance:

On MT-Bench, Zephyr 7B β scored 7.34, while Llama 2 Chat 70B scored 6.86. On AlpacaEval, Zephyr’s win rate was 90.6%, while Llama 2 Chat 70B’s win rate was 92.7%. In other words, Zephyr Beta beats a model ten times its size on MT-Bench and trails it only slightly on AlpacaEval.

The Hugging Face team found that removing the built-in alignment of the datasets improved performance on MT-Bench and made the model more helpful. However, this change means the model may generate problematic text, so it should be used only for educational and research purposes.

How is the model trained?

The Zephyr Beta training process is fascinating: it not only achieves excellent benchmark results but also includes some unique training components.

Everyone is testing the effect of Zephyr, but developers say that the most interesting thing is not the indicators, but the training method of the model.

Pre-trained model Mistral 7B

- Mistral-7B-v0.1 is a small yet powerful model adaptable to many use cases. According to Mistral AI, Mistral 7B outperforms Llama 2 13B on all benchmarks, has natural coding abilities, and supports an 8k sequence length.

Large-scale preference dataset UltraFeedback

- UltraFeedback is a large-scale, fine-grained, and diverse preference dataset.

- It is used to train strong reward and critic models. Its authors collected approximately 64,000 prompts from various sources (UltraChat, ShareGPT, Evol-Instruct, TruthfulQA, FalseQA, FLAN, etc.), queried multiple LLMs with these prompts, and generated 4 different responses per prompt, for a total of 256k samples, in order to collect high-quality preference and textual feedback.
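As a rough illustration of the numbers above, the sketch below checks the sample count and shows one way four scored responses could be reduced to a single chosen/rejected preference pair. The scores, the `binarize` helper, and the selection rule (top-scored response as "chosen", a random other response as "rejected") are assumptions made for illustration, not a description of the exact data pipeline.

```python
import random

PROMPTS = 64_000            # approximate prompt count quoted above
RESPONSES_PER_PROMPT = 4    # responses generated per prompt

total_samples = PROMPTS * RESPONSES_PER_PROMPT
print(total_samples)  # 256000


def binarize(responses, rng):
    """Turn scored responses into one (chosen, rejected) pair.

    Assumed rule for illustration: the highest-scored response is "chosen",
    and a random one of the remaining responses is "rejected".
    """
    ranked = sorted(responses, key=lambda r: r["score"], reverse=True)
    chosen = ranked[0]
    rejected = rng.choice(ranked[1:])
    return chosen, rejected


# Hypothetical scored responses for a single prompt.
example = [
    {"text": "response A", "score": 8.5},
    {"text": "response B", "score": 6.0},
    {"text": "response C", "score": 9.0},
    {"text": "response D", "score": 4.5},
]
chosen, rejected = binarize(example, random.Random(0))
print(chosen["text"])  # response C
```

A pair like this (one preferred answer, one less preferred answer, same prompt) is the kind of input that preference-based fine-tuning works with.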

Direct Preference Optimization (DPO)

DPO can be explained simply as follows :

- To make the output of the model more consistent with human preferences, the traditional method has been to use a reward model to fine-tune the target model. If the output is good, a reward will be given; if the output is bad, no reward will be given.

- The DPO method bypasses modelling the reward function, which is equivalent to optimizing the model directly on the preference data.

- In general, DPO solves the problem of difficulty in training and the high training cost of reinforcement learning based on human feedback.
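To make the idea of "optimizing directly on the preference data" more concrete, here is a minimal sketch of the DPO loss for a single preference pair, written in plain Python. It assumes you already have the summed log-probabilities of the chosen and rejected responses under both the model being trained (the policy) and a frozen reference model. The function name, the argument names, and the value `beta=0.1` are illustrative choices, not taken from the Zephyr training code.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair, given sequence log-probabilities."""
    # How much more the policy favours each response than the reference does.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)), computed in a numerically stable way.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# Policy identical to the reference: margin is 0, so the loss is log(2).
baseline = dpo_loss(-12.0, -12.0, -12.0, -12.0)

# Policy now prefers the chosen response more than the reference does.
improved = dpo_loss(-10.0, -15.0, -12.0, -12.0)

print(baseline, improved)
```

The loss falls as the policy assigns relatively more probability to the chosen response than to the rejected one, so no separate reward model is needed.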

Finally, the model overfit on the preference dataset, yet surprisingly this produced better chat results.

To expand on the point made at the beginning: Zephyr can outperform the 70B Llama 2 mainly because of its special fine-tuning methods.

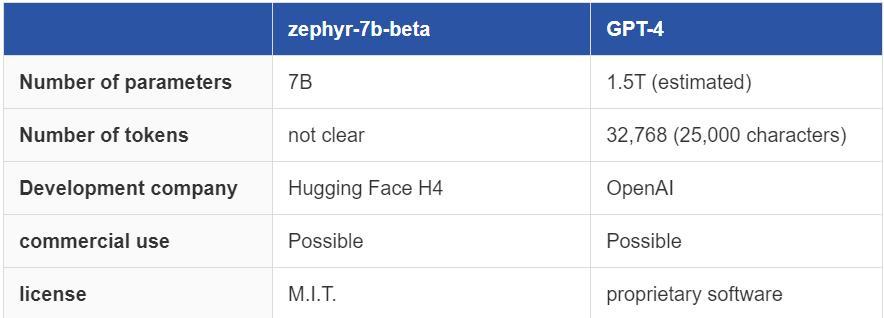

Comparison of GPT-4 vs zephyr-7b-beta

Zephyr is particularly good at translating and summarizing texts but is not good at writing programming code or solving math problems.

As you can see from the diagram above, it has achieved accuracy approaching that of GPT-4 in writing, roleplay, etc. However, its accuracy in coding and math is particularly low.

zephyr-7b-beta pricing structure

Zephyr-7B-β is open source, so it is available for free.

However, running an LLM still incurs costs that depend on the amount of computational resources used, such as model execution time, amount of memory used, and amount of data transferred.
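Memory is usually the first resource to budget for. The back-of-the-envelope sketch below estimates how much memory the weights alone need at different numeric precisions. It assumes a round 7 billion parameters (the exact count differs slightly) and ignores activations and other runtime overhead, so treat the results as a lower bound. The helper name is made up for this example.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory needed just to hold the weights, in GB (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


NUM_PARAMS = 7e9  # "about 7 billion parameters"; an assumed round number

for name, size in [("float32", 4), ("bfloat16", 2), ("int8", 1)]:
    print(f"{name}: ~{weight_memory_gb(NUM_PARAMS, size):.0f} GB")
# float32: ~28 GB
# bfloat16: ~14 GB
# int8: ~7 GB
```

Halving the bytes per parameter halves the weight memory, which is why lower-precision formats matter so much on smaller hardware.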

How To Use zephyr-7b-beta?

This time we will run it with Google Colab.

First, run the code below to install the necessary libraries.

!pip install transformers

!pip install accelerate

Next, run the code below to load the model and prepare it for text generation.

import torch

from transformers import pipeline

pipe = pipeline("text-generation", model="HuggingFaceH4/zephyr-7b-beta", torch_dtype=torch.bfloat16, device_map="auto")

Finally, you can use the model to generate text by running the code below.

messages = [

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "Tell me a joke."}

]

prompt = pipe.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

outputs = pipe(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)

print(outputs[0]["generated_text"])

The messages list above defines the following two entries.

- {"role": "user", "content": "Tell me a joke."}: "Tell me a joke." is the content of the prompt

- {"role": "system", "content": "You are a helpful assistant."}: "You are a helpful assistant." sets the role the LLM should play
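To see roughly what `apply_chat_template` does with these two entries, here is a hand-written approximation of the prompt format. It is inferred from the tags that appear in this article's sample output and is not the tokenizer's actual implementation, so exact whitespace may differ. `format_zephyr_prompt` is a hypothetical helper for illustration only.

```python
def format_zephyr_prompt(messages, add_generation_prompt=True):
    """Approximate the text that the chat template builds from `messages`."""
    parts = []
    for message in messages:
        # Each turn is a role tag, the content, and an end-of-sequence token.
        parts.append(f"<|{message['role']}|>\n{message['content']}</s>\n")
    if add_generation_prompt:
        # End with the assistant tag so the model writes the reply next.
        parts.append("<|assistant|>\n")
    return "".join(parts)


messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Tell me a joke."},
]
print(format_zephyr_prompt(messages))
```

In real code, keep using `apply_chat_template`, since it always matches the template shipped with the model.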

When the above code is executed, the output looks like the following. Because sampling is enabled (do_sample=True), your result may differ.

<|system|>

You are a helpful assistant.</s>

<|user|>

Tell me a joke.</s>

<|assistant|>

Why did the scarecrow win an award?

Because he was outstanding in his field!

The joke was, “Why did the scarecrow win an award? Because he was outstanding in his field!” It is a pun on “outstanding in his field”. Will this cause laughter in English-speaking countries?
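Notice that the printed text contains the whole prompt as well as the answer. If you only want the model's reply, one simple approach is to keep everything after the last `<|assistant|>` tag. The sketch below does this on a copy of the sample output, so it runs without loading the model. `extract_reply` is a hypothetical helper, not part of the transformers library.

```python
ASSISTANT_TAG = "<|assistant|>"


def extract_reply(generated_text):
    """Return only the text after the last assistant tag."""
    # rpartition returns the whole text as the last item if the tag is missing.
    _, _, reply = generated_text.rpartition(ASSISTANT_TAG)
    return reply.strip()


sample_output = (
    "<|system|>\nYou are a helpful assistant.</s>\n"
    "<|user|>\nTell me a joke.</s>\n"
    "<|assistant|>\n"
    "Why did the scarecrow win an award?\n"
    "Because he was outstanding in his field!"
)
print(extract_reply(sample_output))
```

With the real pipeline you would call `extract_reply(outputs[0]["generated_text"])`. The text-generation pipeline also accepts a `return_full_text=False` argument that should have a similar effect.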

Conclusion:

“Zephyr-7b-beta” is a type of LLM that can understand and generate sentences like a human, and in writing and roleplay its accuracy is approaching that of GPT-4.

The key point is that Zephyr was not aligned to human preferences with reinforcement learning from human feedback (RLHF); it uses DPO instead. It is also not deployed with response filtering of the kind ChatGPT uses.

On the other hand, Zephyr is not good at writing programming code or solving math problems. And since its sentences are generated based on learned data, they may sometimes contain inappropriate content.

References:

https://huggingface.co/TheBloke/zephyr-7B-beta-GPTQ

https://huggingface.co/HuggingFaceH4/zephyr-7b-beta

https://replicate.com/tomasmcm/zephyr-7b-beta

https://github.com/huggingface/alignment-handbook